News:

> Selected for the TEI 2015 Student Design Challenge @ Stanford University

> Received a submission invitation and nomination - [the next idea] voestalpine Art & Technology Grant 2015 - Ars Electronica

Coverage:

Elektrischer Reporter (ZDFinfo)

The Creators Project

The Huffington Post (Arts and Culture)

Wired

Deutsche Mittelstands Nachrichten

The insider review

Wearable World News

geekofyou

Hyperallergic

The aim of my research is to understand the modalities of ownership and control when a learning system with capabilities such as forced feedback and automation is employed in a task.

I set out to explore people's behaviours, relationships and nuances with technology through a physical interface that uses forced feedback (here, in the specific context of drawing) and aims to be empathetic enough to aid us in our operations by learning with us and giving us feedback.

The findings of this research are intended to serve as considerations when designing future forced-feedback-enabled systems.

09.12.2014 - final project presentation

Final thesis presentation video @ CIID - December 9th, 2014

Presentation slides in depth

Final exhibition:

How it all started:

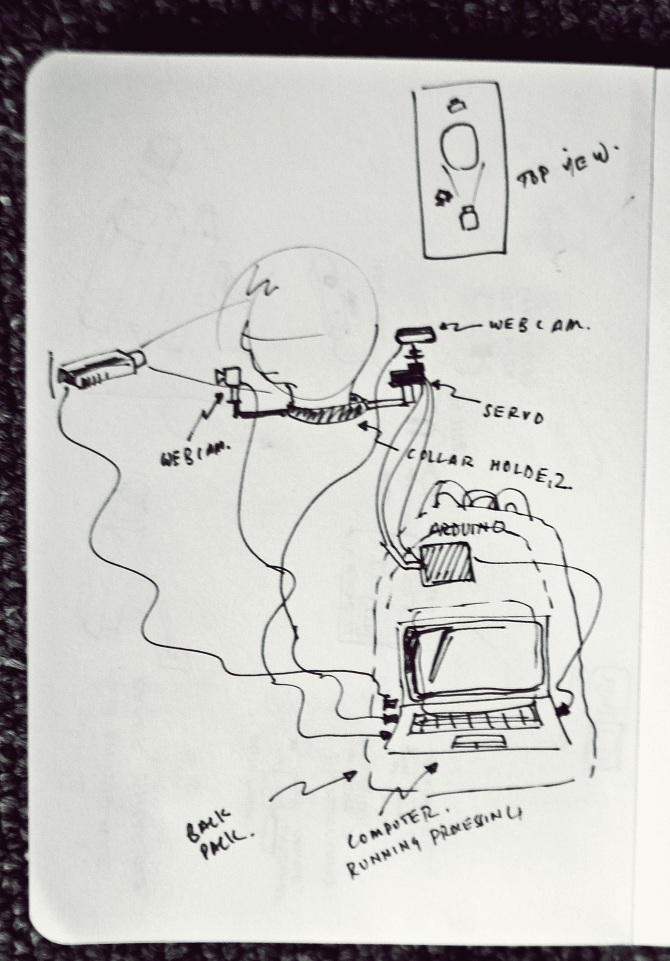

The idea of embodied technology is intriguing, with the added promise that one day we might use it to understand and improve certain acts. That's what developments in the world of wearables are promising these days (have a look at this Intel wearable finalist project). Apart from that, I'm a big fan of DIY robotics and always wanted to have something on my hands. So during the Copenhagen summer (when I had a two-week break), I decided to do some side projects and set myself a goal of three.

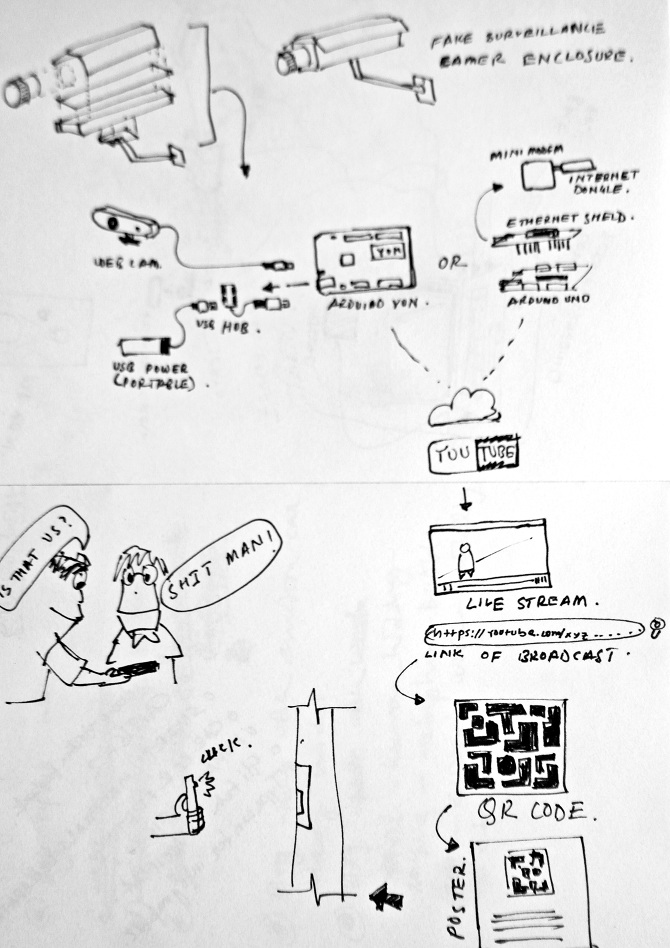

There is no need to try to understand these pictures; just go to my blog.

In the end I managed only two of them, and one of those ideas changed along the way: it simply came to me while I was smoking on the bridge. So I made them, just for fun and to keep my creative juices flowing.

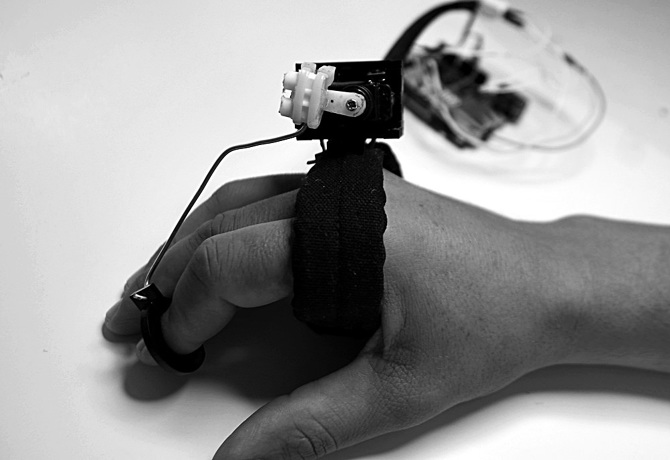

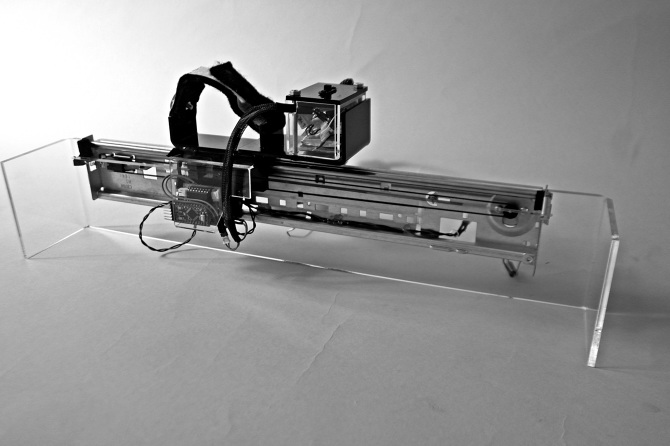

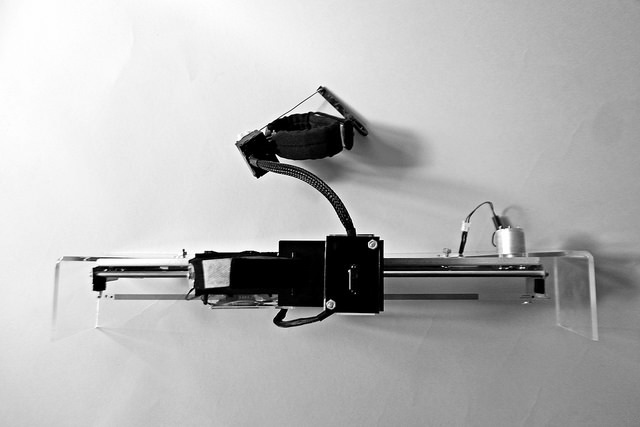

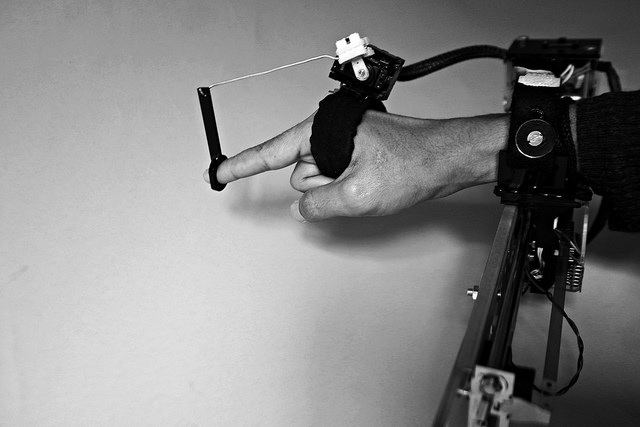

Fig: Forced Finger Project

Fig: Ostrich Project

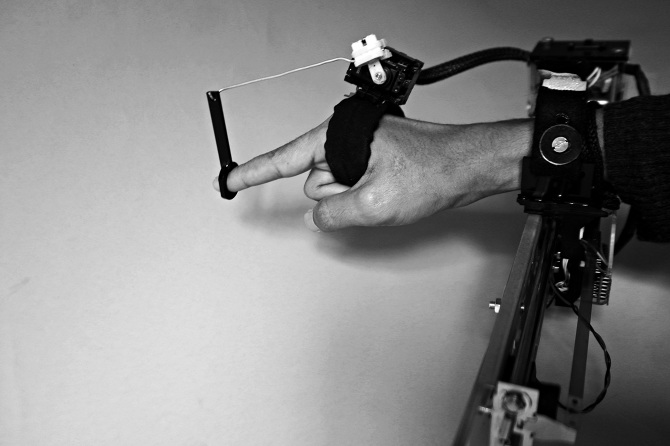

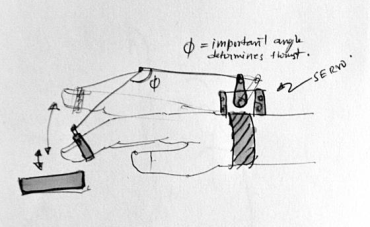

The inspiration behind the Forced Finger Project is a vision for future machines: a simple machine embodied with us, learning from us and then teaching us what we intend to learn, with technology as an aid. Here technology is used to understand human beings, just as pervasive, ubiquitous computing is supposed to do. A small servo is hacked to expose the wiper pin of its internal potentiometer, which is then fed to an Arduino analog input to measure and record the servo's position. For testing purposes an Arduino Yun is used; in the future it could be shrunk down to an Arduino Pro Mini. It is a small example of a forced feedback system implemented with simple exoskeleton robotics.
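Reading the hacked servo comes down to mapping a raw analog value to an angle. The sketch below, written in Python for readability rather than as Arduino code, shows that conversion plus some smoothing of potentiometer jitter. It assumes a 10-bit reading (0 to 1023, as `analogRead()` returns on AVR-based Arduinos); the calibration constants `ADC_AT_0_DEG` and `ADC_AT_180_DEG` and the smoothing factor are hypothetical and would have to be measured on a specific servo, since its potentiometer rarely spans the full voltage range.

```python
# Hypothetical calibration: raw 10-bit readings measured at the two end stops
# of one particular hacked servo. Measure these on your own hardware.
ADC_AT_0_DEG = 100
ADC_AT_180_DEG = 900


def adc_to_angle(raw: int) -> float:
    """Linearly map a raw analog reading to degrees, clamped to 0..180."""
    span = ADC_AT_180_DEG - ADC_AT_0_DEG
    angle = (raw - ADC_AT_0_DEG) * 180.0 / span
    return max(0.0, min(180.0, angle))


def smooth(samples, alpha=0.3):
    """Exponential moving average to suppress potentiometer jitter.

    alpha close to 1 follows the input quickly; close to 0 smooths more.
    """
    out = []
    estimate = None
    for s in samples:
        estimate = s if estimate is None else alpha * s + (1 - alpha) * estimate
        out.append(estimate)
    return out


if __name__ == "__main__":
    raw_readings = [100, 500, 900, 950]
    angles = [adc_to_angle(r) for r in raw_readings]
    print(angles)  # [0.0, 90.0, 180.0, 180.0]
    print(smooth(angles))
```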

The Understanding:

In layman's terms: forced feedback is a type of feedback system, often used to emulate the physical sensation of an action by applying force to the user. Too heavy? Here's a wiki link; just read through it. It's pretty simple, and is more about psychology than a technique in itself.

Earlier in 2014, during a class by Bill Verplank called Motors and Music, a group of students, including me, was asked to design for future cars. "What would it be like if we didn't have to drive anymore?" In Bill Verplank's words, that was the brief. The challenge was to design engaging attributes for future cars using haptic and forced feedback systems. What makes driving a car is the feel while we drive: we feel the engine, the sounds and the vibrations, and those experiences make it whole. When automatic control systems were first used in airplanes to control flight parameters, pilots felt the need to receive artificial feedback for their actions, because they didn't know what was happening. A response always needs to be felt for one to feel part of the system, and that is when the first forced feedback system was developed. Although we have progressed by many decades since then, this kind of system still seems not to be developed or emphasized as much as others when it comes to defining the future of interactions. When we think of a feedback mechanism, all we usually think of is haptics in the form of vibration. Yet looking back, we have always needed a sense of confirmation, a sense of being in control. Recently, new arguments have been raised about the necessity of feedback in control loops, so that humans feel part of the system and keep a sense of ownership.

Forced feedback systems have been around us for a long time in various forms. A classic example is the old spring-loaded ceramic toggle switch: it takes some force to operate, and you feel that the switch has been operated through the rebound of the tensioned spring inside it. Now that our approaches are moving towards new tangible interactions, things that operate out in the real world with fewer screens, a sense of their operation becomes necessary if we are to get any feedback. We perceive things through our senses, and the world is already full of visual clutter. This leads us to explore our other senses, one of which is touch.
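The spring-loaded switch is also the simplest model of what a motor-driven forced feedback device renders: a virtual spring that pushes back in proportion to how far the user has moved from a rest position, plus a damping term so it does not oscillate. The sketch below is that generic spring-damper law, not code from this project; the stiffness `k`, damping `b` and force limit `f_max` are hypothetical values in arbitrary units.

```python
def spring_damper_force(x, v, x_rest=0.0, k=2.0, b=0.1, f_max=5.0):
    """Force a motor would apply to mimic a spring-loaded switch.

    x: current position, v: current velocity (same arbitrary units).
    k, b and f_max are hypothetical stiffness, damping and actuator limit.
    The force points back towards x_rest and grows with displacement,
    which is what the user feels as the 'rebound'.
    """
    f = -k * (x - x_rest) - b * v
    # Real actuators saturate, so clip to what the motor can deliver.
    return max(-f_max, min(f_max, f))


if __name__ == "__main__":
    for displacement in (0.5, 1.0, 4.0):
        print(displacement, spring_damper_force(displacement, 0.0))
```

Raising `k` makes the "switch" feel stiffer; the clip reflects that a small servo can only push so hard.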

While all this has inspired feedback systems for our phones and game controllers, I feel there could be other ways in which feedback could do more than just confirm that something has happened. What happens when forced feedback systems are combined with intelligent machines? How do they help us? Will they help us at all?

These questions led me to develop and explore a system built around a forced feedback loop used not just for confirmation but as an aid to learning.

Exploration through Design

Here I'm exploring how forced feedback systems could be used in multiple situations, and not just for the feel, confirmation or presence of an activity with machines. One way to look at it: when machines act as learning portals, how can we evolve with them so that they don't become overwhelming? What possibilities arise when we apply forced feedback systems with today's technology to design for our near future? Can we create more opportunities when machines have a physical attribute that assists us in learning, rather than just showing us what to learn on a flat screen? With these questions in mind, I started exploring a system that would help a user perform a task by physically helping them learn.

I remember that when I first started learning the alphabet, my teachers used to hold my hand with the pen and trace the letters on the paper multiple times. Once they let go, I would do it over and over again until it became muscle memory and I could do it by myself. I'm taking this as a metaphor for the importance of having one's hand held when learning a new skill. Can technology offer this kind of support, rather than just giving us directions on what to do and how to do it as visual cues on screens? So I decided to see what it feels like when a machine holds your hand while you perform a task. How does it deliver these new experiences and expectations, and how do we react to it?

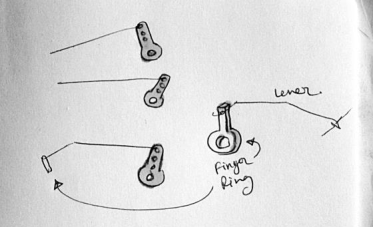

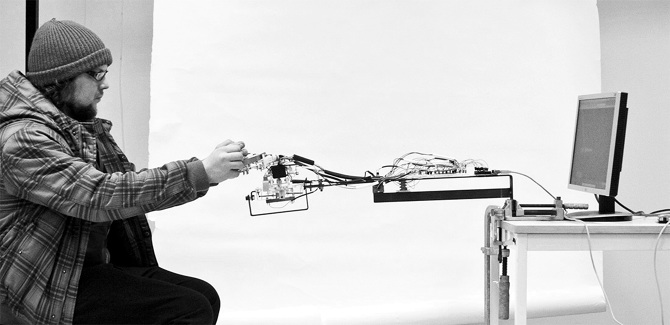

This led to a research-through-design approach, carried out in two parts. My first prototype was a simple contraption that sits over your fist and connects to your fingers through a lever. The starting point was a single, narrowed-down approach to the subject: a two-part machine that might help you learn to play the piano. The technology used is very basic and was developed by tinkering with free, open-source resources from the internet. Note that developing the best hand contraption for learning piano is outside the scope here. While the first contraption controls only a single finger, the second can also control the subject's wrist, and is thus capable of modulating the hand's movement over the whole keyboard.

Initial prototyping

To have discussions with people, I had to give them an experience through which they could actually feel the phenomenon and comment on it. So I set about making the contraptions. First I made a simple servo-motor-based forced feedback system that plays along with our fingers. I wanted machines to be embodied with our bodies, not regarded as separate entities. What makes this simple mechanism interesting is that its actions are passive: it is not meant to provide feedback during a task, but is designed to follow us and provide feedback as part of a process. The process is simple: sense the way we move our fingers, then provide feedback by forcing our fingers through the same sequence afterwards.
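The sense-then-force process can be pictured as a two-phase loop: while recording, sample the finger angle at a fixed rate into a bounded buffer; while replaying, step through the buffer and command the servo to each stored angle at the same rate. This is a minimal Python sketch of that idea, not the project's firmware. `read_angle` and `write_angle` are hypothetical stand-ins for the hardware calls (on an Arduino these would be an `analogRead()` on the potentiometer pin and a `Servo.write()` to the motor), and the waits between samples are only indicated in comments.

```python
from typing import Callable, List


class RecordReplay:
    """Record finger angles, then force the finger back through them.

    read_angle / write_angle are hypothetical stand-ins for the hardware.
    The fixed capacity mimics a microcontroller's small buffer.
    """

    def __init__(self, capacity: int):
        self.capacity = capacity
        self.buffer: List[float] = []

    def record(self, read_angle: Callable[[], float], n_samples: int) -> int:
        """Sample the joint n_samples times, stopping when the buffer is full."""
        self.buffer.clear()
        for _ in range(min(n_samples, self.capacity)):
            self.buffer.append(read_angle())
            # on hardware: wait one sample period here
        return len(self.buffer)

    def replay(self, write_angle: Callable[[float], None]) -> None:
        """Command the actuator to every recorded angle, in order."""
        for angle in self.buffer:
            write_angle(angle)
            # on hardware: wait the same sample period so timing is preserved


if __name__ == "__main__":
    fake_sensor = iter([10.0, 20.0, 30.0, 40.0])
    rr = RecordReplay(capacity=3)
    stored = rr.record(lambda: next(fake_sensor), n_samples=4)
    commanded: List[float] = []
    rr.replay(commanded.append)
    print(stored, commanded)  # 3 [10.0, 20.0, 30.0]
```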

Many people said that being forced by this small contraption to repeat their own actions is very experiential and cannot be conveyed just by talking about it. They could see potential for people using it to learn things like music and instruments, if it were made a bit more embodied, flexible and less machine-like. I had not told them beforehand that my aim here was to see whether forced feedback could be used in teaching; this was their own opinion. Others did not like being forced by machines, but felt strongly about the idea that machines could show reactions and let us sense beyond the hearing and seeing that usual human-machine interactions rely on.

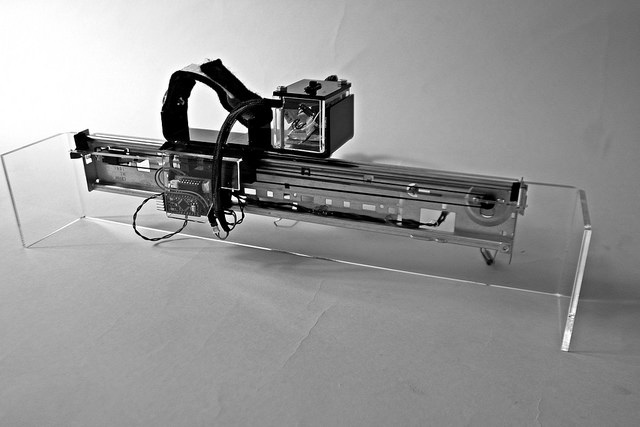

This feedback allowed me to develop the contraptions in a more specific direction: using the embodied forced feedback mechanism to teach piano, if that is possible at all. I developed an extension to the previous contraption that could control the wrist by tracking its movements. For the prototype I took apart an old printer and used its linear bed to make the extension (see the blog for technical details).

Hacking an old printer for the linear contraption.

Initial linear encoder hacking

Success in linear encoder reading
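Printer carriages are usually tracked by an optical sensor reading a striped encoder strip. Assuming that sensor provides two channels (A and B) in quadrature, which is common but not confirmed here, reading it means counting Gray-code transitions in the right direction. The Python sketch below shows the generic lookup-table decoder; on an Arduino, `update()` would be called from a pin-change or `attachInterrupt()` handler. `lines_per_inch` is a hypothetical strip resolution to be checked on the actual printer.

```python
# Transition table indexed by (previous_state << 2) | new_state,
# where state = (A << 1) | B. Forward order here is 00 -> 01 -> 11 -> 10 -> 00.
# +1 = one step forward, -1 = one step back, 0 = no change or an invalid jump.
_QUAD_TABLE = (
    0, +1, -1, 0,
    -1, 0, 0, +1,
    +1, 0, 0, -1,
    0, -1, +1, 0,
)


class QuadratureDecoder:
    """Software quadrature decoder for a two-channel (A/B) optical encoder."""

    def __init__(self, a: int = 0, b: int = 0):
        self.state = (a << 1) | b
        self.count = 0
        self.errors = 0  # both channels changed between samples: a step was missed

    def update(self, a: int, b: int) -> int:
        new_state = (a << 1) | b
        step = _QUAD_TABLE[(self.state << 2) | new_state]
        if step == 0 and new_state != self.state:
            self.errors += 1
        self.count += step
        self.state = new_state
        return self.count


def counts_to_mm(count: int, lines_per_inch: float = 150.0) -> float:
    """Convert counts to millimetres; full quadrature gives 4 counts per line."""
    return count / (lines_per_inch * 4) * 25.4


if __name__ == "__main__":
    dec = QuadratureDecoder()
    for a, b in [(0, 1), (1, 1), (1, 0), (0, 0), (1, 0)]:
        dec.update(a, b)
    print(dec.count, dec.errors)  # 3 0
```

Counting the invalid jumps is a cheap way to notice when the sensor is sampled too slowly for the carriage speed.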

I'll skip some of the technical details. It took me some reading of blog posts, then about three nights of coding, to understand closed-loop PID control with torque control. Thanks to David and Jakob for the initial help.

Then I was able to log the linear positions and feed them back to the motors.
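The closed loop can be illustrated with a textbook discrete PID position controller: the error between a logged target position and the measured position becomes a motor command (a PWM duty, used as a rough proxy for torque), clamped to the actuator limit, with the integral frozen while saturated (conditional-integration anti-windup). This is a generic Python sketch, not the code used in the project; the gains, the output limit and the toy plant (a unit mass with viscous friction) are all hypothetical and would need tuning on real hardware.

```python
class PID:
    """Discrete PID with output clamping and conditional-integration anti-windup."""

    def __init__(self, kp: float, ki: float, kd: float, out_limit: float):
        self.kp, self.ki, self.kd = kp, ki, kd
        self.out_limit = out_limit
        self.integral = 0.0
        self.prev_error = None

    def step(self, target: float, measured: float, dt: float) -> float:
        error = target - measured
        derivative = 0.0 if self.prev_error is None else (error - self.prev_error) / dt
        self.prev_error = error
        candidate = self.integral + error * dt
        u = self.kp * error + self.ki * candidate + self.kd * derivative
        if abs(u) > self.out_limit:
            # Saturated: clamp the command and do not accumulate the integral.
            return max(-self.out_limit, min(self.out_limit, u))
        self.integral = candidate
        return u


def follow_logged_positions(targets, steps_per_target=1000, dt=0.01):
    """Drive a toy motor model through each logged position in turn.

    Hypothetical plant: unit mass with viscous friction, u acting as force/torque.
    Returns the position reached at the end of each hold period.
    """
    pid = PID(kp=20.0, ki=20.0, kd=8.0, out_limit=100.0)
    x, v = 0.0, 0.0
    reached = []
    for target in targets:
        for _ in range(steps_per_target):
            u = pid.step(target, x, dt)
            v += (u - 1.0 * v) * dt  # acceleration = command - friction
            x += v * dt
        reached.append(x)
    return reached


if __name__ == "__main__":
    print(follow_logged_positions([10.0, 4.0, 7.5]))
```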

Some more desk research:

Documentation in progress.

Fig: Haptic Forestry - an exploration in cutting down visual clutter in the heavily visual interface of a crane vehicle.

Fig: Prospectus For A Future Body - where the artist uses the body itself as a medium and as a memory of movements.

Fig: A choreography through a human medium by a human medium

Fig: Pro Glove - an interesting nudging interface to get you going fast.

Fig: Dexmo - an apparatus to feel the virtual in our hands

The next big step:

Soon came the call for the final thesis. Since I was very interested in researching modalities and studying behaviours in our relationship with technology, I wanted to extend this project around forced feedback systems.

So I set myself a brief for the final thesis:

I set out to explore a physical interface that would be empathetic enough to aid us in our operations by learning with us and giving us feedback, just as a responsible teacher would. In my research, I want to explore how the nuances of forced feedback systems can be used to learn new skills by developing muscle memory along the way: how technology can support and elevate the experience of building muscle memory, if that is possible at all. More precisely, I would like to find out whether it is possible, and suitable, to use a forced feedback system to enhance fine motor skills through muscle memory.

Here I'm exploring how forced feedback systems could be used in multiple situations, and not just for the feel, confirmation or presence of an activity with machines. One way to look at it: when machines act as learning portals, how can we evolve with them so that they don't become overwhelming? What possibilities arise when we apply forced feedback systems with today's technologies? Can we create more opportunities when machines have a physical attribute that assists us in learning, rather than just showing us what to learn on a flat screen? With these questions in mind, I started exploring a system that would help a user perform a task by physically helping them learn.

Muscle memory has been used synonymously with motor learning, a form of procedural memory that involves consolidating a specific motor task into memory through repetition. When a movement is repeated over time, a long-term muscle memory is created for that task, eventually allowing it to be performed without conscious effort. I remember that when I first started learning the alphabet, my teachers used to hold my hand with the pen and trace the letters on the paper multiple times. Once they let go, I would do it over and over again until it became muscle memory and I could do it by myself. I'm taking this as a metaphor for the importance of having one's hand held when learning a new skill.

The key to building good muscle memory is to focus on the quality, and not only the quantity, of the repetitions performed. So for now I would focus on a drawing experience: how technology can elevate it by helping develop muscle memory, and whether forced feedback mechanisms are suitable for that purpose. Whether forced feedback can help develop muscle memory faster would also be explored in the process.

On a broader scale, the aim of this exploration is to add to our understanding of how human beings behave with learning machines, and of the modalities of forced feedback technology in that respect. When it is applied to learning and assistance, which factors govern our perception, and which nuances need to be understood to design such systems in a more empathetic and less commanding manner in the future? To that end, a series of experiments will be performed, and the knowledge generated in this specific context will be generalized for use in multiple future contexts.

Development of probes:

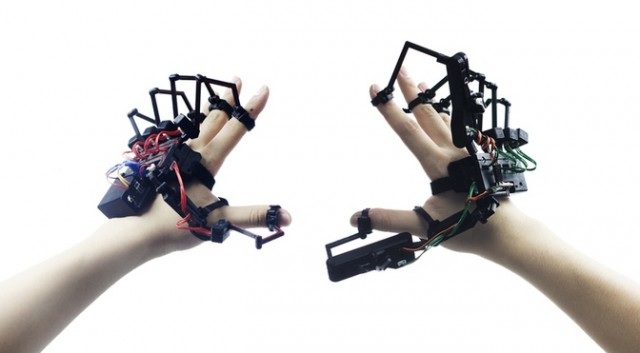

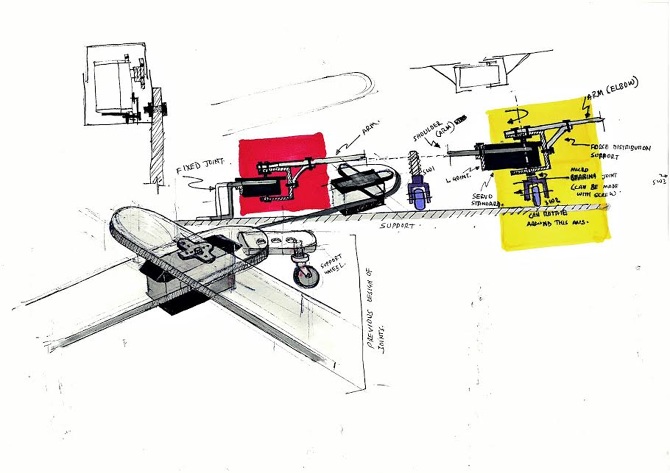

Since the system was now to be used on a much larger scale, I needed to develop the probes. The problem with the linear arrangement was that it was only one-dimensional, so to make it more approachable I started from the ground up.

The first step was a drawing machine capable of drawing something on paper. I quickly made a very crude arrangement with servos and a pen to test the inverse kinematics needed on the 2D plane to let the machine draw. Alongside it, I wrote software that captures a live image and sends path data to the contraption, enabling it to draw. All of this was done very rapidly, in a day and a half.
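For a pen arm on a flat sheet, inverse kinematics means finding the joint angles that put the pen tip at a requested (x, y). Assuming a simple two-link arm (a shoulder servo at the origin and an elbow servo), the standard closed-form solution uses the law of cosines. This is a Python sketch of that textbook solution, not necessarily the geometry of the actual contraption; the link lengths are hypothetical, and converting the returned radians into servo commands would need mounting offsets specific to the build.

```python
import math


def two_link_ik(x, y, l1, l2, elbow_sign=1):
    """Joint angles (radians) that put the pen of a 2-link planar arm at (x, y).

    The shoulder sits at the origin; l1 and l2 are the link lengths.
    elbow_sign = +1 or -1 picks one of the two mirror-image solutions.
    """
    c2 = (x * x + y * y - l1 * l1 - l2 * l2) / (2 * l1 * l2)  # law of cosines
    if not -1.0 <= c2 <= 1.0:
        raise ValueError("target is out of the arm's reach")
    s2 = elbow_sign * math.sqrt(1.0 - c2 * c2)
    elbow = math.atan2(s2, c2)
    shoulder = math.atan2(y, x) - math.atan2(l2 * s2, l1 + l2 * c2)
    return shoulder, elbow


def forward_kinematics(shoulder, elbow, l1, l2):
    """Pen position for given joint angles, used here to check the IK."""
    x = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    y = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return x, y


if __name__ == "__main__":
    L1, L2 = 8.0, 6.0  # hypothetical link lengths in cm
    square = [(6.0, 2.0), (10.0, 2.0), (10.0, 6.0), (6.0, 6.0)]
    for px, py in square:
        a1, a2 = two_link_ik(px, py, L1, L2)
        print(f"({px}, {py}) -> shoulder {math.degrees(a1):.1f} deg, "
              f"elbow {math.degrees(a2):.1f} deg")
```

Running each point of a traced path through `two_link_ik` yields the servo angle sequence the machine would follow.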

First Prototype:

Software for the machine to see and trace live images

Here is a video I made for my advisor, who was not present at the time, as a heads-up on what was happening.

The aim of this system and software was to understand the negotiations people make when a machine and a human have different perspectives but the same goal: how they complement or counter each other. Later, a learning mechanism was added so that the machine can see, perform, learn with the user and then produce an approximated guide.

Then I focused on the contraption itself, making it very modular, portable and expandable between experiments.

I made an arm-sized contraption, like a modular exoskeleton that can be mounted on a tabletop. It took two nights in total, only to discover that the servo motors broke while I was hacking them, and that the arrangement was too heavy for standard servo motors to operate. Also, one of the experiments was to record a user's position and repeat it afterwards, so the microcontrollers needed enough on-board memory to store the position data. I wanted an arrangement that did not need a computer.
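Whether a computer-free recording fits on the microcontroller is a quick back-of-the-envelope calculation: joints × sample rate × bytes per angle gives bytes per second, and the available memory divided by that gives the recording length. For reference, the ATmega328P used on the Arduino Pro Mini has 2 KB of SRAM and 1 KB of EEPROM (the Yun's ATmega32U4 has 2.5 KB of SRAM and also 1 KB of EEPROM). The joint count, sample rate and one-byte-per-angle encoding below are hypothetical choices, not the project's actual storage scheme.

```python
# Memory sizes of the ATmega328P (Arduino Pro Mini).
EEPROM_BYTES_ATMEGA328P = 1024  # 1 KB EEPROM, survives power-off
SRAM_BYTES_ATMEGA328P = 2048    # 2 KB SRAM, shared with the rest of the program


def recording_seconds(memory_bytes, joints, sample_rate_hz, bytes_per_angle=1):
    """Seconds of motion that fit when every sample stores all joint angles."""
    bytes_per_second = joints * sample_rate_hz * bytes_per_angle
    return memory_bytes / bytes_per_second


def pack_angles(angles_deg):
    """Quantise servo angles (0..180 degrees) to one byte each."""
    return bytes(int(round(max(0.0, min(180.0, a)))) for a in angles_deg)


if __name__ == "__main__":
    # Hypothetical setup: 2 joints sampled at 50 Hz, 1 byte per angle.
    print(recording_seconds(EEPROM_BYTES_ATMEGA328P, joints=2, sample_rate_hz=50))  # 10.24
    print(list(pack_angles([0.0, 90.4, 181.0])))  # [0, 90, 180]
```

About ten seconds of two-joint motion per kilobyte is a tight budget, which is why the sample rate and encoding matter on such small boards.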

Second Prototype

Here is a video of me explaining this prototype to my advisor. These videos are very low quality and exist only for the record.

At this point I also developed the code that lets the machine learn from the user's movement and then repeat it back.
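The learning code itself is not described here. One simple, generic way for a machine to "learn" a movement from a user is to record several demonstrations, resample each to the same number of points (people move at different speeds) and average them into a single reference trajectory. The Python sketch below shows that idea for one joint; for a 2D pen path, the same steps would be applied to each axis. The resampling-by-index approach and all example data are illustrative assumptions.

```python
from typing import List, Sequence


def resample(seq: Sequence[float], n: int) -> List[float]:
    """Linearly interpolate a 1-D recording onto n evenly spaced points (n >= 2)."""
    if n < 2:
        raise ValueError("need at least two output points")
    if not seq:
        raise ValueError("empty recording")
    if len(seq) == 1:
        return [float(seq[0])] * n
    out = []
    for i in range(n):
        t = i * (len(seq) - 1) / (n - 1)  # fractional index into seq
        j = min(int(t), len(seq) - 2)
        frac = t - j
        out.append(seq[j] * (1 - frac) + seq[j + 1] * frac)
    return out


def average_demonstrations(demos: Sequence[Sequence[float]], n: int = 50) -> List[float]:
    """Average several recordings of the same movement into one reference trajectory."""
    resampled = [resample(d, n) for d in demos]
    return [sum(values) / len(values) for values in zip(*resampled)]


if __name__ == "__main__":
    # Three hypothetical recordings of one joint angle (degrees), different lengths.
    demos = [[0, 30, 60, 90], [0, 45, 90], [10, 40, 70, 100, 100]]
    print([round(a, 1) for a in average_demonstrations(demos, n=5)])
```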

Final Prototype

After realizing that the structure was too heavy, I decided to make another model. I redesigned some of the existing load-bearing structures so that they could take the load of a person's hand without stalling the servo motors. This again took a whole day to finish properly.

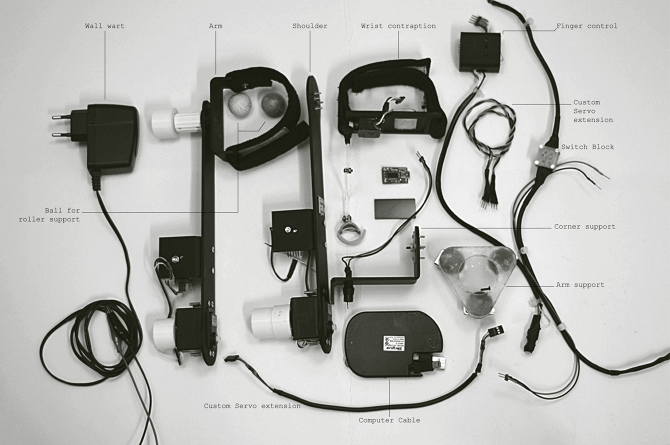

The modular probe breakdown

At this point my prototype was more or less ready, so I made another heads-up video for my advisor, showing him my progress. At this stage, the probe's main capabilities are:

1] It can record a motion and replay it back.

2] It can record, learn and replay a motion back.

3] It can draw looking at an image.

4] It can look at an image, try to draw it, learn from your motion, approximate between the two and try to draw it back.

It was brought to my attention that it would be better to collect some analytical data while people were performing, giving the whole exploration an analytical approach and some quantitative value. So I arranged for microcontroller-based EMG and EEG sensors. I stuck electrodes on the subjects to measure the electromyograms produced by their muscle activity. That way I could see when they were reacting and when peak reactions happened.

EMG measurement system

Electromyograms
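To see when subjects react and when peak reactions happen, a raw EMG trace is commonly turned into an envelope first: remove the DC offset, full-wave rectify, smooth with a moving average, then flag samples above a threshold and pick local maxima. The Python sketch below follows that generic chain; it is not the project's analysis code. The default window of 25 samples (about 50 ms at a hypothetical 500 Hz sample rate) and the threshold are assumptions to tune for the actual sensor, for instance setting the threshold as a multiple of the resting level.

```python
from typing import List, Sequence, Tuple


def emg_envelope(raw: Sequence[float], window: int = 25) -> List[float]:
    """Remove the DC offset, full-wave rectify, then apply a moving average."""
    mean = sum(raw) / len(raw)
    rectified = [abs(s - mean) for s in raw]
    envelope = []
    running = 0.0
    for i, r in enumerate(rectified):
        running += r
        if i >= window:
            running -= rectified[i - window]  # drop the sample leaving the window
        envelope.append(running / min(i + 1, window))
    return envelope


def detect_reactions(envelope: Sequence[float],
                     threshold: float) -> Tuple[List[int], List[int]]:
    """Indices where the muscle is active, and local peaks above the threshold."""
    active = [i for i, e in enumerate(envelope) if e > threshold]
    peaks = [
        i for i in range(1, len(envelope) - 1)
        if envelope[i] > threshold
        and envelope[i] >= envelope[i - 1]
        and envelope[i] > envelope[i + 1]
    ]
    return active, peaks


if __name__ == "__main__":
    raw = [0, 0, 0, 0, 10, -10, 10, -10, 0, 0, 0, 0]  # toy burst of activity
    env = emg_envelope(raw, window=3)
    print(detect_reactions(env, threshold=5.0))  # ([5, 6, 7, 8], [7])
```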

Some other arrangements and software preparations

Simple shape recognition and drawing arrangement

Simple shape recognition and pattern learning software.
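A common, simple way to tell basic shapes apart, such as the circle and rectangle used in the experiments, is the circularity measure 4πA/P², which is 1 for a perfect circle and π/4 (about 0.785) for a square. The Python sketch below computes it for a closed polygon of traced points using the shoelace formula. This is one generic technique, not necessarily how the project's software recognised shapes, and the `circle_cutoff` value is hypothetical; hand-drawn input would also need some smoothing first.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def area_and_perimeter(points: Sequence[Point]) -> Tuple[float, float]:
    """Shoelace area and perimeter of a closed polygon (last point joins the first)."""
    area2 = 0.0
    perimeter = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]
        area2 += x1 * y2 - x2 * y1
        perimeter += math.hypot(x2 - x1, y2 - y1)
    return abs(area2) / 2.0, perimeter


def circularity(points: Sequence[Point]) -> float:
    """4*pi*A / P**2: 1.0 for a circle, pi/4 (about 0.785) for a square."""
    area, perimeter = area_and_perimeter(points)
    return 4.0 * math.pi * area / (perimeter * perimeter)


def classify_shape(points: Sequence[Point], circle_cutoff: float = 0.88) -> str:
    """Very rough circle-vs-rectangle decision; circle_cutoff is hypothetical."""
    return "circle" if circularity(points) >= circle_cutoff else "rectangle"


if __name__ == "__main__":
    square = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]
    ring = [(math.cos(2 * math.pi * k / 64), math.sin(2 * math.pi * k / 64))
            for k in range(64)]
    print(classify_shape(square), classify_shape(ring))  # rectangle circle
```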

The experiments themselves

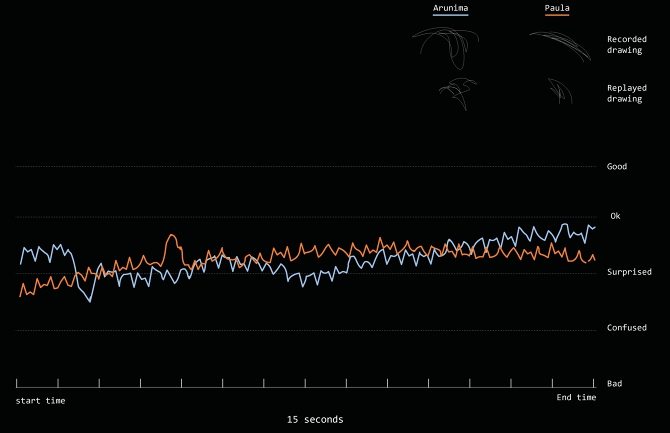

Experiment One:

Sense of trust

Dilemma

Empathy

Following and co-operation.

Scenario:

One person puts their hand in the machine, which has already recorded someone else's motion. At the end, he or she also gets a chance to make a drawing, letting the machine log their movements to use later with someone else.

To observe:

Issues. Reactions. Reasons. Causes of issues. Modulations. Reasoning.

Observation:

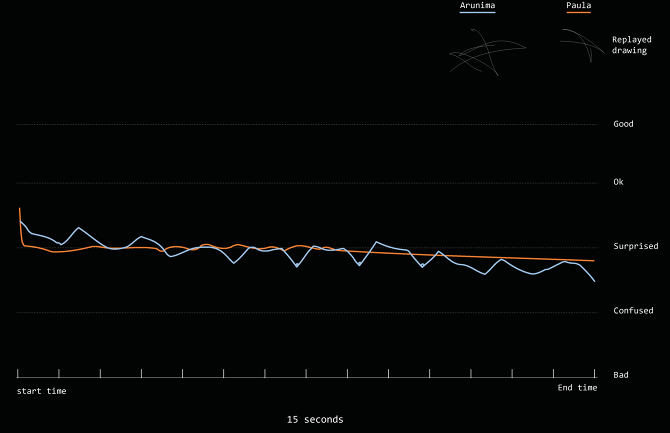

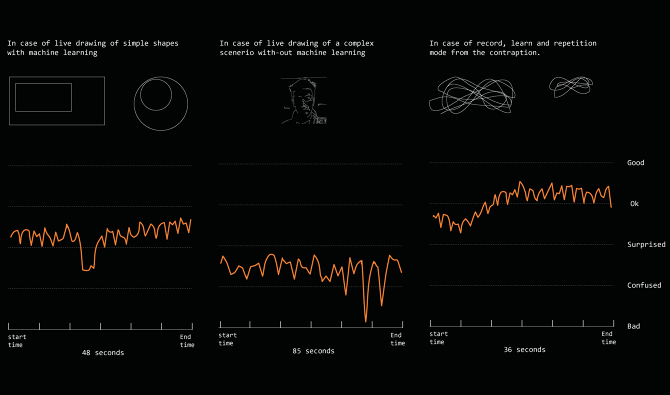

Myogram data extraction and mapping

Qualitative observation and inference

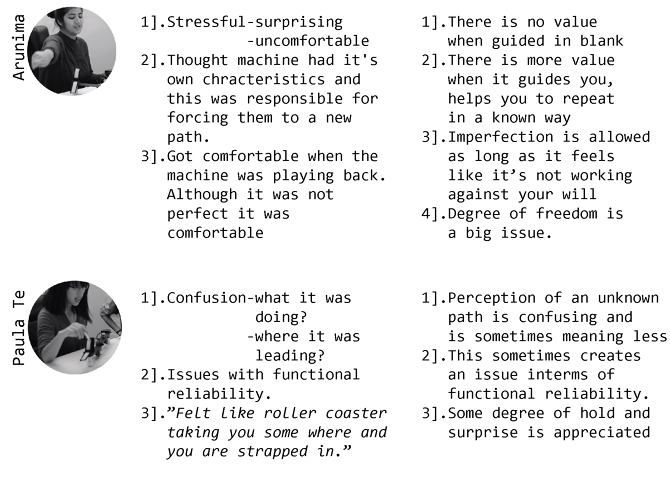

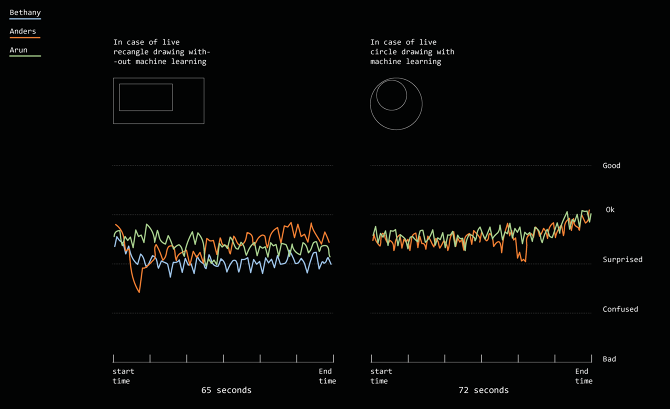

Experiment Two:

Same objective, maybe different perspectives: sense of performance, scale and paths. Semi-capability of the machine to work with the subject.

Scenario:

The machine can draw a computer-generated path of a simple shape, and the person is asked to draw the same shape. But the two might have different ideas of the shape's scale. The person puts their hand inside the machine and draws the same shape that both have been directed to draw. The shapes are basic ones, such as a rectangle or a circle. In the later phase, the machine also takes into account how the person wants to draw (making a best-fit path from the computer-generated path and the path calculated from the person's movements).
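The best-fit step can be sketched in two parts: first estimate the scale at which the person is drawing by least-squares fitting the computer-generated shape onto the person's path (aligning centroids, then solving for one scale factor), then blend the rescaled machine path with the person's path using a weight `alpha` that sets how much the machine defers to the person. This Python sketch is an illustrative technique, not the project's algorithm; it assumes both paths were resampled to the same number of points with matching starting points, and `alpha` is a hypothetical parameter.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]


def centroid(points: Sequence[Point]) -> Point:
    n = len(points)
    return (sum(p[0] for p in points) / n, sum(p[1] for p in points) / n)


def fit_to_person(machine: Sequence[Point],
                  person: Sequence[Point]) -> Tuple[float, List[Point]]:
    """Least-squares scale s (and translation) mapping the machine's shape onto the person's.

    Assumes point i of one path corresponds to point i of the other.
    """
    if len(machine) != len(person):
        raise ValueError("paths must have the same number of points")
    mx, my = centroid(machine)
    px, py = centroid(person)
    num = sum((u[0] - px) * (g[0] - mx) + (u[1] - py) * (g[1] - my)
              for g, u in zip(machine, person))
    den = sum((g[0] - mx) ** 2 + (g[1] - my) ** 2 for g in machine)
    s = num / den
    fitted = [(px + s * (g[0] - mx), py + s * (g[1] - my)) for g in machine]
    return s, fitted


def blend(fitted: Sequence[Point], person: Sequence[Point], alpha: float) -> List[Point]:
    """alpha = 0 keeps the rescaled machine path, alpha = 1 follows the person exactly."""
    return [((1 - alpha) * f[0] + alpha * u[0], (1 - alpha) * f[1] + alpha * u[1])
            for f, u in zip(fitted, person)]


if __name__ == "__main__":
    machine = [(0.0, 0.0), (1.0, 0.0), (1.0, 1.0), (0.0, 1.0)]   # unit square
    person = [(5.0, 5.1), (7.1, 5.0), (6.9, 7.0), (5.0, 6.8)]    # larger, wobbly
    s, fitted = fit_to_person(machine, person)
    print(round(s, 3), blend(fitted, person, alpha=0.5))
```

Raising `alpha` as the machine gathers more of the person's strokes would be one way to let it defer gradually.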

To observe:

Negotiation. Complement. Improvisation. Appreciation. Rejection. Reaction. Dilemma. Risking.

Observation:

Myogram data extraction and mapping

Qualitative observation and inference

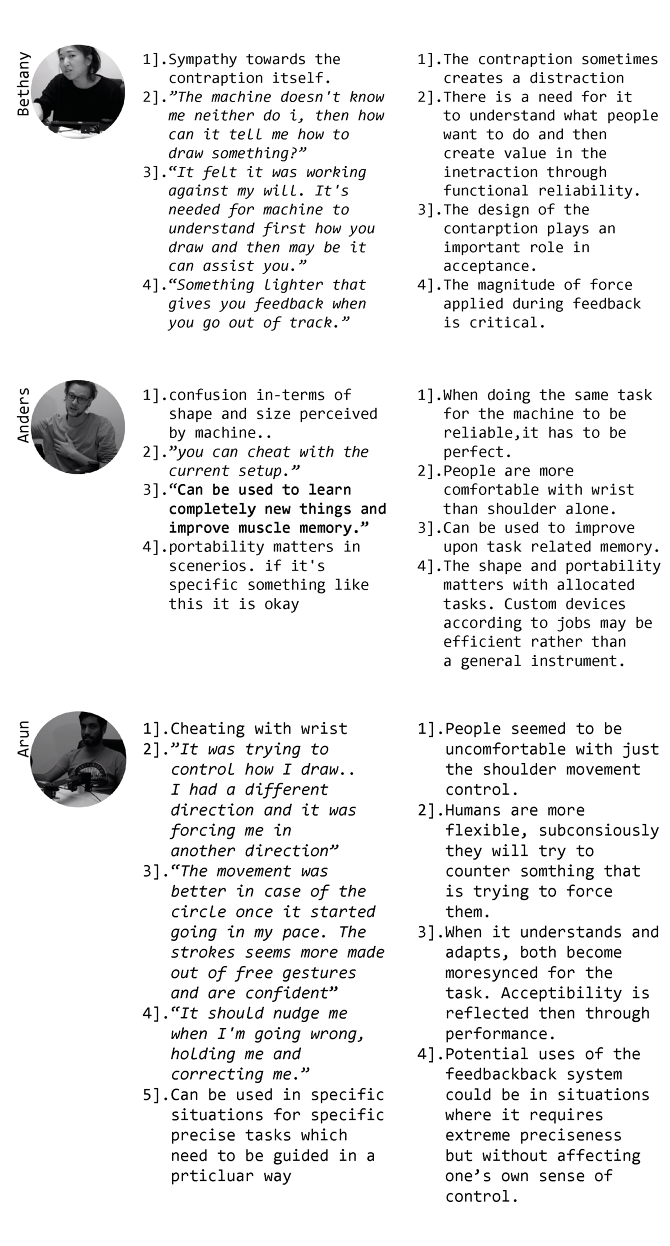

Experiment Three:

Same objective, maybe different perspectives: sense of performance, scale and paths. Semi-capability of the machine to work with the subject.

Scenario:

All three subsets of the experiments are now performed with a single subject, to observe their behaviour and their thoughts about the transitions and the machine's behaviour. In addition, the machine can now approximate an average force based on its own vision and the person's movement. Although very crude, this gives an extra opportunity to examine all three subsets and the transitions between them.

To observe: Negotiation. Complement. Improvisation. Appreciation. Rejection. Reaction. Dilemma. Risking.

Observation:

Myogram data extraction and mapping

Qualitative observation and inference

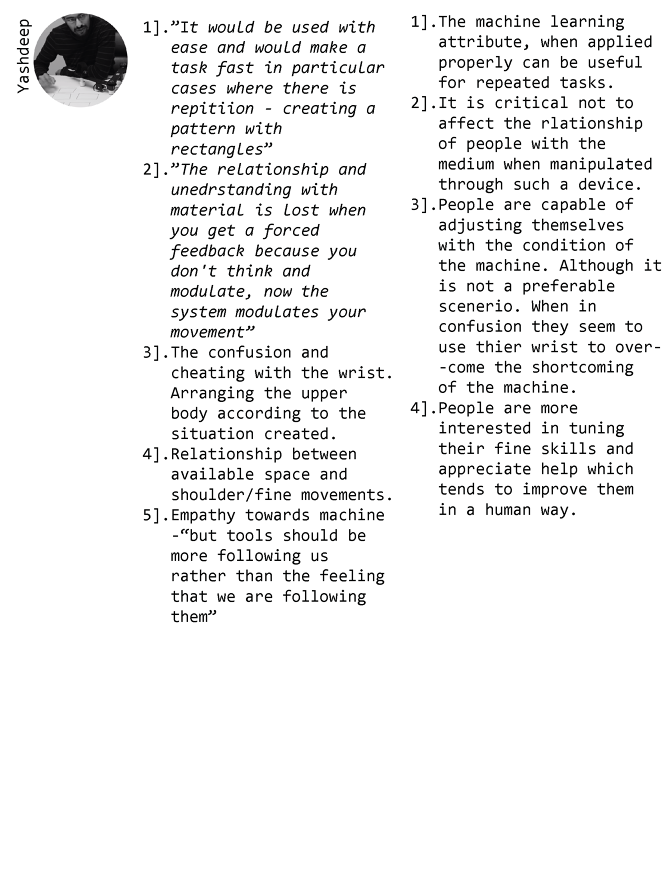

A few conclusions:

In guidance-based tasks, an external forced feedback system is more valuable if it communicates that it understands the person and then helps perform the task in a complementary fashion. Otherwise, its force can sometimes be a distraction. When such a guiding system is accepted, proper synchrony is achieved between the human and the machine, which is often reflected in the quality and quantity of the tasks and the ease with which they are performed.

In the experiments, the dilemma of agency and of how control is distributed became apparent. Some actions reflected that under tense conditions, people prefer to take control over the machine. The allocation and sharing of control between human and machine has to be flexible so that the sense of ownership and reliability is not much affected.

Degrees of freedom are a big issue, and the design of the contraption plays an important role. Portability and modularity, i.e. the shape, size and fixture, depend greatly on the context of the tasks it is designed for. For a specific task like practising typography, there is no harm in it being fixed (provided it still offers good degrees of freedom). For other tasks it could be portable.

There is a potential risk of disrupting the relationship between people and their medium during a task. This depends greatly on the magnitude of the force and the way it is applied, so both have to be modulated.

These are a few of the current observations that I hope will help guide us towards better interfaces when a forced feedback system is being considered for an activity. They are neither complete nor the only results that could exist; the subject needs more scrutiny.

Special Thanks to David Gauthier

© Saurabh Datta