
I was recently toying with the idea of setting up a FreeNAS virtual machine on my ESXi 6.5 host. It would give my testing environment an alternative shared storage resource. With that in mind, I sat down and started looking for recommendations on how to accomplish the task. Many of the posts I came across recommended passthrough (or passthru), technically known as DirectPath I/O. My original thought had been to use an RDM or a plain old VMDK attached to the FreeNAS VM.

The problem with the latter approach is mainly one of performance, even in a testing environment. With DirectPath enabled, I could give the FreeNAS VM direct access to a couple of unused drives installed on the host. So, without giving it further thought, I went ahead and enabled pass-through on the storage controller of my testing ESXi host.

And here comes the big disclaimer. DO NOT DO THIS ON A LIVE SYSTEM. You have been warned!

This post exists solely to help some poor soul out there recover from what turned out to be a silly oversight. More on this below.

What went wrong?

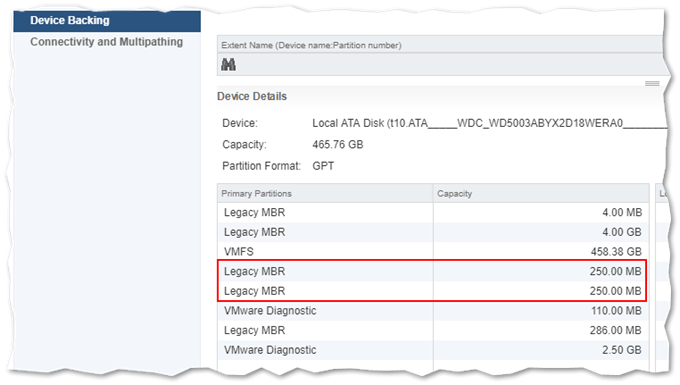

The physical host I chose for my grandiose plan has a single Ibex Peak SATA controller with three disks attached to it. Nothing fancy. It offers software-emulated RAID, which ESXi doesn’t even recognize, hence the unused drives. I’m only using one drive, which is also the one I installed ESXi on. Remember, this is a testing environment. That same drive also holds the default local datastore and a couple of VMs.

The drive, by default, is split into a number of partitions during the ESXi installation. Two of the partitions, called bootbank and altbootbank, hold the ESXi binaries that are loaded into memory during the booting process. I’ll come back to this later.

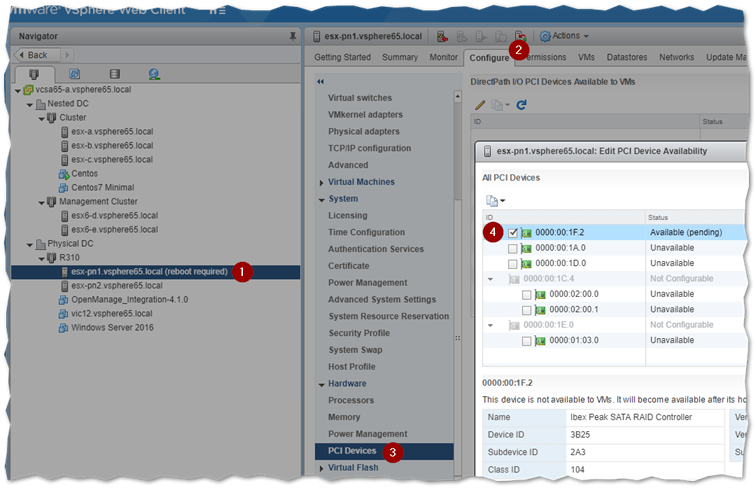

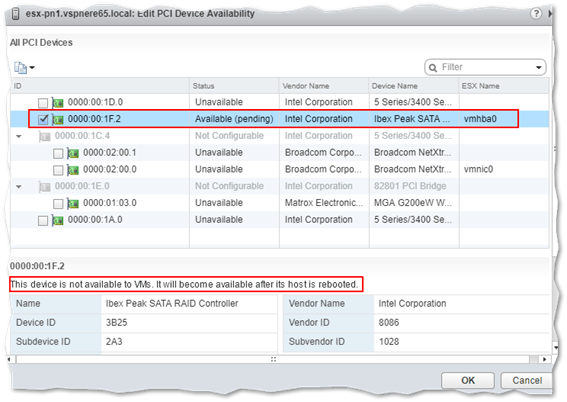

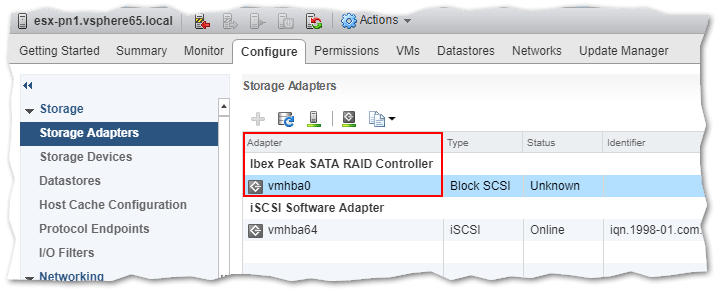

Back to the business of enabling DirectPath I/O on a PCI card such as a storage controller. In the next screenshot, I’ve highlighted the ESXi host in question and labeled the steps taken to enable DirectPath for a PCI device. This is enabled by simply ticking a box next to the device name. In this case, I enabled pass-through on the Ibex Peak SATA controller. Keep in mind that this is my one and only storage controller.

After enabling DirectPath, you are reminded that the host must be rebooted for the change to take effect.

Again, without giving it much thought, I rebooted the host. Five minutes later, the host was back online. I proceeded to create the new FreeNAS VM, but I quickly found out that I no longer had a datastore to create it on. And it suddenly dawned on me! With DirectPath enabled, the hypervisor no longer has access to the device. Darn!

And that’s when I ended up with a bunch of inaccessible VMs on a datastore that was no more.

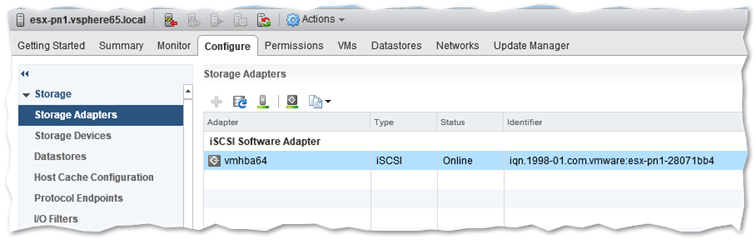

At this point, panic would have quickly ensued if this were a live system, but thankfully it was not. I started digging around in the vSphere Client, and one of the first things I noticed was that the Ibex storage adapter was not listed under Storage Adapters, no matter how many rescans I carried out.

The logical solution that first came to mind was to disable DirectPath I/O on the storage controller, reboot the host and restore everything to its former glory. So I did just that, rebooted the host and waited another five minutes, only to discover that the host was still missing the datastore and storage adapter.

The Fix

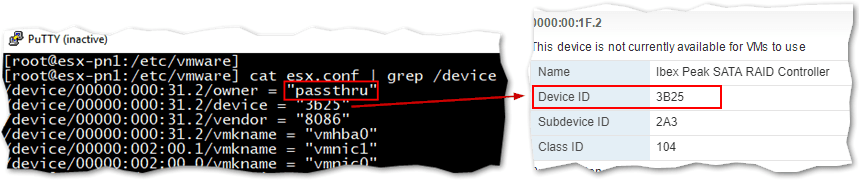

The first fix I found online is the one described in KB1022011. Basically, you SSH to the ESXi host and inspect /etc/vmware/esx.conf, looking for the line where a device’s owner value is set to passthru. Changing this value to vmkernel disables pass-through. To verify that you have the correct device, compare its /device value with the Device ID shown in the vSphere Web Client under Configure -> PCI Devices -> Edit.

In this example, the device ID for the storage controller is 3b25, which confirms that I’m changing the correct entry. You can see this in the next screenshot.
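If you would rather not scan esx.conf by eye, the lookup can be scripted. The sketch below defines a hypothetical helper, list_passthru, that prints each device whose owner is passthru together with its device ID. It assumes entries follow the /device/<address>/owner and /device/<address>/device pattern described above; the exact address format may vary between builds, so treat the output as a pointer and still check the file yourself.

```shell
#!/bin/sh
# list_passthru: print "<address> <device ID>" for every device whose owner
# is "passthru" in an esx.conf-style file.
# ASSUMPTION: entries follow the pattern
#   /device/<address>/owner = "passthru"
#   /device/<address>/device = "<device ID>"
list_passthru() {
    conf="$1"
    # Pull out the <address> part of each passthru owner line.
    grep '^/device/.*/owner = "passthru"' "$conf" |
        sed 's#^/device/\(.*\)/owner = .*#\1#' |
        while IFS= read -r addr; do
            # Fetch the Device ID value recorded for the same address.
            id=$(grep -F "/device/$addr/device = " "$conf" |
                sed 's/.*= "\(.*\)"/\1/')
            echo "$addr $id"
        done
}
```

On the host, paste the function into the SSH session and run list_passthru /etc/vmware/esx.conf. An output line ending in 3b25 would point at the storage controller from this example.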

To replace the entry, use vi to substitute passthru with vmkernel, which is what I did. I rebooted the host a third time and, much to my annoyance, it was still missing the datastore. After some more digging around, I came across these two links: "The wrong way to use VMware DirectPath" and KB2043048.

The first link is what helped me fix the issue, and it also inspired this post, so kudos to its author.

So, why did the fix fail?

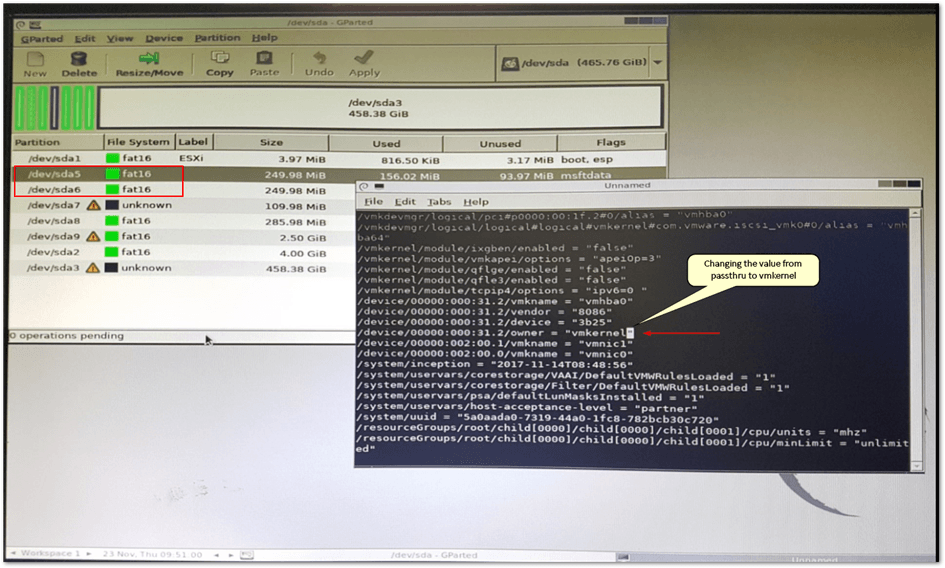

The simple explanation is that, given my setup, the changes won’t persist. With its only storage controller passed through, the host can no longer reach the boot drive once it is up, so everything runs off volatile memory, that is, RAM. Once ESXi is rebooted, the change made to esx.conf is lost and it’s back to square one. During the boot process, the ESXi binaries are loaded from partition sda5 (bootbank) or sda6 (altbootbank), depending on which one is currently marked active.

The host’s configuration files are automatically and periodically backed up by a script to an archive called state.tgz, which is saved to whichever of the two partitions is active at the time. This backup mechanism is what allows you to revert to a previous state using Shift-R while ESXi is booting. Unfortunately, in my case, the pass-through change had been backed up as well and, or so it seemed, copied to both partitions.

ESXi has full visibility of the drive while booting up, which explains why it manages to boot in the first place despite the pass-through setting. The pass-through setting is only enforced once esx.conf is read.

Reverting to a previous state using Shift-R did not work for me, so I went down the GParted route.

The GParted Fix

GParted (GNOME Partition Editor) is a free, Linux-based disk management utility that has saved my skin on countless occasions. You can create a bootable GParted USB stick by downloading the ISO from the GParted website and writing it with Rufus.

As mentioned already, we need to change the passthru setting in the backed-up esx.conf file found in the state.tgz archive. To do this, we must first uncompress state.tgz, which contains a second archive, local.tgz.

Uncompressing local.tgz yields an etc folder, where we find vmware/esx.conf. Open esx.conf in an editor, find and change the passthru entry, then compress the etc folder back into local.tgz and local.tgz back into state.tgz.

Finally, we overwrite the original state.tgz files on sda5 and sda6 and reboot the host.

Here’s the whole procedure in step form.

Step 1 – Boot the ESXi server off the GParted USB stick. Once it’s up, open a terminal window.

Step 2 – Run the following commands.

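    cd /
    mkdir /mnt/hd1 /mnt/hd2 /temp
    mount -t vfat /dev/sda5 /mnt/hd1
    mount -t vfat /dev/sda6 /mnt/hd2

This assumes the ESXi drive shows up as /dev/sda. If the GParted USB stick took that name instead, the partitions may be /dev/sdb5 and /dev/sdb6.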

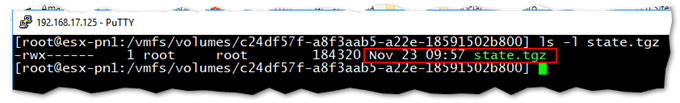
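Because device names depend on how GParted enumerates the disks, it helps to confirm that the two mount points really are the bootbank partitions before going further. A bootbank holds, among other files, boot.cfg and state.tgz, so the hypothetical is_bootbank check below simply looks for both. It is a quick sanity check, not proof that the partition is healthy.

```shell
#!/bin/sh
# is_bootbank: succeed if DIR looks like an ESXi bootbank, i.e. it holds
# both boot.cfg and state.tgz. Only a sanity check after mounting.
is_bootbank() {
    [ -f "$1/boot.cfg" ] && [ -f "$1/state.tgz" ]
}

# Report on both mount points created in Step 2.
for dir in /mnt/hd1 /mnt/hd2; do
    if is_bootbank "$dir"; then
        echo "$dir: looks like a bootbank"
    else
        echo "$dir: boot.cfg/state.tgz not found, check the device names"
    fi
done
```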

Step 3 – Determine which state.tgz file is most current. Run the following commands and look at the time stamps. You’ll need to uncompress the most recent one to keep using the current host configuration save for the changes we’ll be making.

    ls -l /mnt/hd1/state.tgz
    ls -l /mnt/hd2/state.tgz

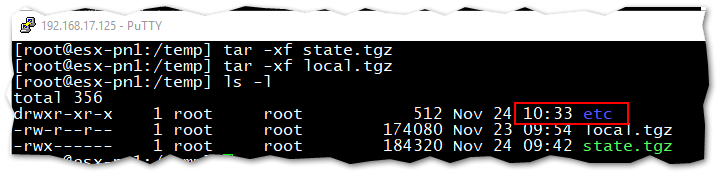

Here, I’m showing the file while SSH’ed into the host.
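Comparing the ls -l output by eye works fine, but the same decision can be made with the shell’s -nt ("newer than") file test. newest_state is a hypothetical helper name; if neither file is newer than the other, it simply returns the second path, so eyeball the timestamps anyway when they look identical.

```shell
#!/bin/sh
# newest_state: echo whichever of two files has the more recent
# modification time, using the test command's -nt ("newer than") operator.
# If neither file is newer than the other, the second path is returned.
newest_state() {
    if [ "$1" -nt "$2" ]; then
        echo "$1"
    else
        echo "$2"
    fi
}
```

For example, cp "$(newest_state /mnt/hd1/state.tgz /mnt/hd2/state.tgz)" /temp copies whichever file wins.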

Step 4 – Copy the most current state.tgz to /temp. Here, I’ve assumed that the most current file is the one under /mnt/hd1. Yours could be different.

    cp /mnt/hd1/state.tgz /temp
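Before editing anything, it is worth confirming that the copied backup really does carry the pass-through setting. The sketch below defines a hypothetical helper, backed_up_passthru, that streams esx.conf out of state.tgz and then local.tgz using tar’s -O (extract to stdout) option, so nothing is written to disk. It assumes the members are stored as local.tgz and etc/vmware/esx.conf, which is what the extraction in the next step suggests.

```shell
#!/bin/sh
# backed_up_passthru: print any passthru owner lines from the esx.conf that
# lives inside state.tgz -> local.tgz -> etc/vmware/esx.conf.
# ASSUMPTION: member names are exactly "local.tgz" and "etc/vmware/esx.conf".
# Returns 0 if at least one passthru line was found, non-zero otherwise.
backed_up_passthru() {
    state="$1"
    # -O sends the extracted member to stdout, so nothing touches the disk.
    tar -xzOf "$state" local.tgz |
        tar -xzOf - etc/vmware/esx.conf |
        grep 'owner = "passthru"'
}
```

Running backed_up_passthru /temp/state.tgz prints the offending line(s) if the backup still holds the pass-through setting, and prints nothing otherwise.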

Step 5 – Uncompress state.tgz and the resulting local.tgz using tar. You should end up with an etc directory as shown.

    cd /temp
    tar -xzf state.tgz
    tar -xzf local.tgz

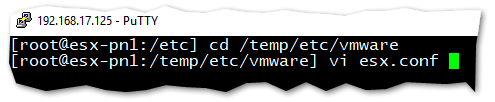

Step 6 – Navigate to /temp/etc/vmware and open esx.conf in vi.

- Press [/] and type passthru followed by [Enter]. This takes you to the line you need to edit, assuming passthru has been enabled for just one device. If not, press [n] to jump to the next match and make sure the device ID matches, as explained above.

- Press [i] (or [Insert]) to enter insert mode and change the value to vmkernel.

- Press [ESC], [:] and type wq.

- Press [Enter] to save the changes.
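If you are not comfortable in vi, sed can make the same change non-interactively. The sketch below defines a hypothetical set_owner_vmkernel function that only touches lines ending in owner = "passthru". With a second argument it restricts the change to a single device address, assumed to appear in the /device/<address>/owner form. Keeping a copy of the original file (cp esx.conf esx.conf.orig) before running it is a good idea.

```shell
#!/bin/sh
# set_owner_vmkernel: non-interactive version of the vi edit.
# Usage: set_owner_vmkernel <esx.conf> [address]
# Without an address, every line ending in owner = "passthru" is switched to
# "vmkernel". With an address (ASSUMED to use the /device/<address>/owner
# form), only that device's line changes. The address is pasted into a sed
# expression, so it must not contain '#' or '\'.
set_owner_vmkernel() {
    conf="$1"
    addr="${2:-}"
    if [ -n "$addr" ]; then
        sed -i "s#^/device/$addr/owner = \"passthru\"\$#/device/$addr/owner = \"vmkernel\"#" "$conf"
    else
        sed -i 's#\(/owner = \)"passthru"$#\1"vmkernel"#' "$conf"
    fi
}
```

From /temp/etc/vmware, set_owner_vmkernel esx.conf performs the edit in one go.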

Step 7 – Rebuild local.tgz and state.tgz, then copy state.tgz back to /mnt/hd1 and /mnt/hd2.

    cd /temp
    rm *.tgz
    # -z gzip-compresses the archives, matching the original .tgz format
    tar -czf local.tgz etc
    tar -czf state.tgz local.tgz
    cp state.tgz /mnt/hd1
    cp state.tgz /mnt/hd2
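Before rebooting, a quick sanity check on the rebuilt archive can save another round trip through GParted. state.tgz is a gzip-compressed tarball, so gzip -t should accept the new file; a plain, uncompressed tar that merely carries a .tgz name fails this test. check_state is a hypothetical helper that also confirms local.tgz is listed inside and that each copy matches /temp/state.tgz byte for byte. Run it while /mnt/hd1 and /mnt/hd2 are still mounted.

```shell
#!/bin/sh
# check_state: sanity-check a copied state.tgz before rebooting.
#   1. gzip -t: the file must really be gzip-compressed.
#   2. tar -tzf: the archive must list local.tgz.
#   3. cmp -s: the copy must match the reference file byte for byte.
check_state() {
    candidate="$1"
    reference="$2"
    gzip -t "$candidate" || return 1
    tar -tzf "$candidate" | grep -qx 'local.tgz' || return 1
    cmp -s "$candidate" "$reference" || return 1
}
```

For example:

    for f in /mnt/hd1/state.tgz /mnt/hd2/state.tgz; do check_state "$f" /temp/state.tgz && echo "$f OK"; done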

Unmount the partitions with umount /mnt/hd1 /mnt/hd2, then reboot the host, and your datastore and virtual machines should spring back to life. The procedure worked for me: the storage adapter, datastore and VMs all came back with no apparent issues.

Conclusion

The moral of the story is to test, test and test again before replicating something on a production system. Reading the manual and doing some research first go a long way toward avoiding similar situations. The correct solution, in this case, would of course have been to install a second storage controller and enable DirectPath on it. The optimal solution would be to install FreeNAS on proper hardware instead of virtualizing it, something I haven’t written about but might cover in a future post. In the meantime, have a look at the complete list of VMware posts on this blog for more interesting topics to learn from.


43 thoughts on "Why Enabling DirectPath I/O on ESXi can be a Bad Idea"

Hi,

Good article for other people that might be having this problem, I’m glad you were able to fix it. I think the problem was not using exclusive passthrough. If you enable passthrough for the entire storage controller and then add it as a PCIe device instead of using Directpath IO, it works just fine. Of course, you can’t load ESXi with the storage controller anymore. I did this for years using my motherboard’s storage controller for FreeNAS and just ran ESXi off a USB drive. Now I have 3 servers I do it with, although I have started using FreeBSD instead of FreeNAS since it’s less bloated. All the machines boot ESXi from a completely different storage controller, generally USB, although one I boot ESXi off the internal SATA and passthrough an LSI SAS2008 raid controller. It’s very reliable if you just keep that premise in mind – the whole thing has to be for just one VM and you’re not doing anything with it until the VM boots up, at which point you can use NFS or iSCSI controlled by the VM to go back to ESXi for a datastore. Another option is just to use KVM as a hypervisor instead and install software RAID (ZFS, MDADM, etc.) on the host itself, since it’s running full-fledged Linux (or Omni-OS in the case of SmartOS). But although KVM has its advantages, it does not seem to perform quite as well as ESXi as a pure VM hypervisor…