Before I flip my proverbial switch to MLB, I wanted to share some reflections on a humbling 2018 fantasy football season.

As most of y’all know, I am a bit of an odd duck. Most fantasy football enthusiasts love season-long, DFS or both. I love building and maintaining in-season fantasy player projection systems. I like competing in the FantasyPros Accuracy contest better than season-long and DFS because it aligns better with that pursuit. Being almost 100% focused on running/improving the projection system (aka Pigskinonator) also aligns best with the needs of our subscribers so it is a win-win.

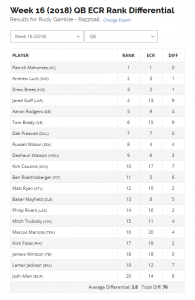

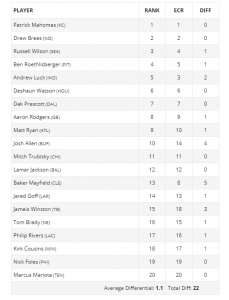

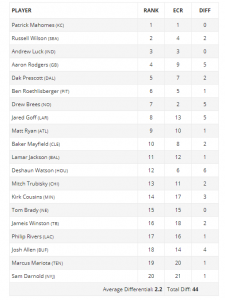

This was the 3rd year I competed in the FantasyPros NFL accuracy contest and there has been one constant throughout that time: I rank players further from ‘consensus’ than just about anyone. In 2016, I was the furthest from consensus (aka boldest). In 2017, I was the 2nd boldest. In 2018, I was the 4th boldest. The whole ‘boldest’ thing can be pretty abstract so, to better illustrate it, see below for my Week 16 QB ranks vs consensus as well as those of 3 anonymous rankers that span a range from very close to relatively far from consensus. As you can see, ‘bold’ does not necessarily mean BONKERS. It generally means in line with consensus with a bunch of minor differences and a couple of major differences.

My objective has never been to be one of the boldest rankers. My overarching objective is to provide the most value to subscribers when ranking/projecting players. I do that by developing a player projection model and constantly refining it based on additional data/learnings. For NFL, I do weekly QA on the rankings and part of that process entails comparing vs ‘consensus’ ranks to determine whether outliers (guys I ranked much higher/lower vs consensus) are legitimate vs a ‘bug’ – e.g., something is not working right, the model needs tweaking, etc. For example, I discovered towards the end of the season that my weather data feed reports rain as binary (either a chance or no chance) when it is really a projected percentage chance. My research on the impact of rain is BINARY as it either rained that game or did not. So the full rain factors were being applied whether there was a 5% chance or 95% chance of rain. I muted the impact of rain and am hopeful that my data feed will include percentages next season.
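To make the rain issue concrete, here is a minimal sketch of how a percentage chance of rain could be used if the feed supplied one. This is not how Pigskinonator works; the function name, the `rain_factor` multiplier and the sample numbers are all hypothetical, and blending by probability is just one reasonable assumption.

```python
def rain_adjusted_projection(base_points, rain_factor, rain_probability):
    """Blend the dry and rainy projections by the chance of rain.

    rain_factor is a hypothetical multiplier taken from research on games
    where it actually rained (e.g. 0.90 means 10% fewer points in the rain).
    """
    if not 0.0 <= rain_probability <= 1.0:
        raise ValueError("rain_probability must be between 0 and 1")
    rainy_points = base_points * rain_factor
    # Expected value over the two outcomes: it rains, or it does not.
    return rain_probability * rainy_points + (1.0 - rain_probability) * base_points


# A binary feed behaves like rain_probability=1.0 whenever any rain is possible.
binary_flag = rain_adjusted_projection(20.0, 0.90, 1.0)
light_chance = rain_adjusted_projection(20.0, 0.90, 0.05)
heavy_chance = rain_adjusted_projection(20.0, 0.90, 0.95)
```

With these made-up inputs, a 5% chance of rain barely moves the projection while a 95% chance lands close to the full rain penalty, which is the distinction the binary flag loses.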

I would never intentionally build a model to be ‘bolder’. That does not make any sense. The goal is always to make it as accurate as possible. But if there is anything ‘bold’ in my approach, it is that if the projection appears legitimate, I publish the results and ignore the consensus opinion. I think this is how the minority of rankers who publish projections like myself operate, whereas many of those doing manual ranking are influenced to varying degrees by the gravitational pull of the consensus ranks. Some experts are so close to consensus ranks that the only explanation is that they just use the consensus ranks and make a few minor tweaks – i.e., there is so little difference in projected points for QBs 6-20 that it is virtually impossible not to have a certain amount of random ranking differences vs consensus. But I know two other rankers who operate similarly to me and their rankings are closer to consensus, so my divergence from consensus is also driven by methodological factors.

I think there is significant value to my approach because so much of weekly season-long and DFS is finding the outlier – e.g., the WR primed for a big week who is still on waivers or the underpriced RB for a GPP. In addition, while I believe in the ‘wisdom of crowds’, I also think there is a groupthink/laziness factor that comes into play and I relish trying to be one step ahead of the consensus opinion on a player.

That said, this approach has its downside. The consensus ranks are not dumb. There are a lot of knowledgeable fantasy football minds participating in the contest. The consensus is smarter than the majority of ‘bold’ projections. If your #1 goal is to do well in the FantasyPros contest, the safer route is to hew close to the consensus rankings. This is exacerbated to some degree by FantasyPros’ methodology, which penalizes boldness by grading an expert on 1) all players that finish in the top x (20 for QB, 35 for RB, 40 for WR, 15 for TE), 2) the players the expert ranks in the top x, and 3) the players the consensus ranked in the top x. So let’s say I rank the consensus WR30 and WR50 at WR50 and WR30 and both get zero points. That SHOULD be a draw. But it is not. The expert who picked like consensus will only be graded on the WR30 guy (based on criteria #2 and #3) whereas I would be graded on both WRs (one because of criterion #2, one because of criterion #3). Remember how mad Football Analyst Twitter went when the Giants took Saquon Barkley at #2 instead of a QB/OT/DL/trading down? This makes me madder than that.
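The WR30/WR50 example can be sketched in a few lines. This only models which players get graded under the three criteria as I described them above, not FantasyPros’ actual scoring; the function, the player names and the actual finishing ranks are hypothetical.

```python
def graded_players(actual_rank, expert_rank, consensus_rank, cutoff):
    """Players an expert is graded on: anyone in the actual, expert,
    or consensus top `cutoff` (criteria 1, 2 and 3)."""
    def top(ranks):
        return {player for player, rank in ranks.items() if rank <= cutoff}
    return top(actual_rank) | top(expert_rank) | top(consensus_rank)


WR_CUTOFF = 40  # top x for WR

consensus = {"Receiver A": 30, "Receiver B": 50}
follower = {"Receiver A": 30, "Receiver B": 50}  # ranks exactly like consensus
bold = {"Receiver A": 50, "Receiver B": 30}      # swaps the two receivers
actual = {"Receiver A": 90, "Receiver B": 91}    # both score zero (made-up finishes)

follower_pool = graded_players(actual, follower, consensus, WR_CUTOFF)
bold_pool = graded_players(actual, bold, consensus, WR_CUTOFF)
```

Here `follower_pool` contains only Receiver A while `bold_pool` contains both receivers, so the bold ranker eats two misses where the consensus follower eats one.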

The correlation between Accuracy and Boldness from 2016-2018 ranged from -0.4 to -0.62. A correlation of -1.0 would be the strongest possible inverse relationship (basically the boldest ranker is the least accurate) and zero would be no relationship between the two. In addition, of the 72 top 20% accuracy finishes in the past 3 years, only four were also in the top 20% for ‘boldness’ (2016 – Jason Moore of The Fantasy Footballers at 83rd percentile accuracy / 94th percentile boldness, 2017 – Rudy Gamble at 96th/99th, Staff Rankings Daily Roto at 81st/93rd, 2018 – Dalton Del Don of Yahoo! at 94th/91st). The average boldness of the top 20% finishers was around the 25th percentile.
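For anyone who wants to run the same kind of check on the posted standings, here is a minimal sketch of a Pearson correlation between boldness and accuracy percentiles. I am assuming Pearson here, and the five percentile pairs are made-up illustration values, not contest data.

```python
import math


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two lists of the same length (at least 2 values)")
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / math.sqrt(var_x * var_y)


# Hypothetical percentiles for five rankers (not real standings).
boldness_pct = [10, 30, 50, 70, 90]
accuracy_pct = [80, 90, 40, 60, 20]
r = pearson(boldness_pct, accuracy_pct)

# Perfectly inverse ordering: the boldest ranker is always the least accurate.
r_inverse = pearson([10, 30, 50, 70, 90], [90, 70, 50, 30, 10])
```

A negative `r` means bolder rankers tended to be less accurate in the sample, and the perfectly inverse case comes out at -1.0.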

(I’ve posted the 2016-2018 standings and boldness percentiles here. I found percentile rank is the easiest way to compare vs accuracy but it is worth noting that the extent of the boldness spikes up in the top decile. The 97th percentile equates to each player being about 4.5 picks from consensus, the 90th percentile is 3.3 picks from consensus, the 80th percentile is 2.7 picks, the 50th percentile is 2 picks, and the 10th percentile is 1.3 picks).
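As a rough illustration of ‘picks from consensus’ and percentile rank, here is a small sketch. I am assuming boldness is the average absolute gap between an expert’s rank and the consensus rank, and that percentile is the share of rankers with a smaller gap; both helper functions and all the sample ranks are hypothetical.

```python
def picks_from_consensus(expert_rank, consensus_rank):
    """Average absolute rank gap over the players both lists contain."""
    shared = expert_rank.keys() & consensus_rank.keys()
    if not shared:
        raise ValueError("no players in common")
    return sum(abs(expert_rank[p] - consensus_rank[p]) for p in shared) / len(shared)


def percentile_rank(value, all_values):
    """Percent of values strictly below `value`."""
    below = sum(1 for v in all_values if v < value)
    return 100.0 * below / len(all_values)


consensus = {"QB A": 1, "QB B": 2, "QB C": 3, "QB D": 4}
expert = {"QB A": 1, "QB B": 4, "QB C": 3, "QB D": 2}  # swaps QB B and QB D

avg_gap = picks_from_consensus(expert, consensus)
pct = percentile_rank(3.0, [1.0, 2.0, 3.0, 4.0])
```

In this toy case the expert is off by two picks on two of four players, so `avg_gap` is 1.0 pick per player.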

Some other interesting observations before I talk about my 2018 performance:

- There is increasing consensus amongst experts. The median difference per week between expert and consensus rank decreased in all four positions (QB, RB, WR, TE) from 2016 to 2017 and again from 2017 to 2018.

- While tough to quantify, I think the consensus opinion is getting a little smarter each year. Sean Koerner (The Action Network) three-peated from 2015-2017 and, similar to me, is less influenced by consensus opinion as he ranks based on his own projected values. His Boldness percentile from 2016-2018 has gone from 54th to 42nd to 38th. When you combine that with the fact that the median difference per week vs consensus has gone DOWN during the same time period (so his percentiles are not driven by an influx of bold rankers), I think it likely points to slight improvements in the accuracy of the consensus ranks.

- Experts tend to be consistent year over year in their boldness – there is a correlation of 0.77 for 2016 vs 2017 and 0.64 for 2017 vs 2018 in Boldness percentile. Some interesting shifts over the past three years are:

  - All three Fantasy Footballers (Andy, Mike, Jason) went from ‘bold’ in 2016 (83rd-94th percentile) to close to consensus in 2017 (18th-27th percentile). All three were 12th-20th percentile in 2018. All three finished in the top 20 for Accuracy in 2017 and 2018.

  - Robert Waziak of Pyromaniac (who finished 7th in 2017 and 2nd in 2018) was very bold in 2016 (95th percentile) but has been around 20th percentile the last two years.

  - NumberFire’s site projections went from 20th percentile in 2016 to 95th percentile in 2017-2018.

- I find it weirdly hilarious that the mainstream Yahoo and CBS experts are uniformly bold (all 8 for 2018 were in top half for boldness) while the six experts at Pro Football Focus (which I am a big fan of because their research/grading uncovers contrarian insights) averaged 17th percentile in boldness with 3 in the bottom decile (bottom 10th percentile).

I guess that is enough stalling. I finished 97th out of 122 in overall accuracy (ouch). It is impossible to sugarcoat it. I’m super disappointed. It is still ‘ahead of the curve’ for being the 4th boldest from consensus but last year’s 5th place finish had me convinced I had a good shot at winning the contest. It’s going to require a summer of digging/research to retool for 2019 but here are some highlights/lowlights/insights:

Highlights

- I finished 9th in RB accuracy after finishing 1st in 2017. That is comforting.

- I finished 2nd in Kicker and DST (both to Sean Koerner) after finishing 4th and 9th respectively in 2017. Too bad those categories don’t count for the overall contest!

Lowlights

- I finished 102nd in QB, 118th in WR, and 114th in TE. Just brutal.

Insights

- This is less an insight and more an unfounded observation but there was something weird about 2018. Put my performance aside as I do not have a long track record. Sean Koerner won 2015-2017. He finished in 14th. John Paulsen is a perennial top 10. He finished 12th. DailyRoto.com has sharp people and their staff rankings finished 22nd in 2017 and 90th in 2018. Andy Behrens was in the top 10 in the middle of the season. Weird.

- I expect more week-to-week extremes given my boldness. I am going to get some top 10 weeks. I am also going to have really bad weeks. Last year, I had 5 top 10 weeks (4 1st place, 1 2nd place). This year I had 4 top 10 weeks (3rd, 5th, 6th, 10th). The big difference was that last year I had only two weeks where I fell outside the top 100 whereas this year it was six. It’s probable that variance/luck was on my side in 2017 and hurt me in 2018. I’d rather focus on the part I can control which is looking for areas of improvement.

- I’m more concerned about QB/WR than TE as TE is flukier. There were two weeks where having Zach Ertz 3rd vs 2nd cost me a ridiculous amount of points. If I had him 2nd those two weeks, I’m in 70th. If the 1st place finisher in TE had ranked Ertz 3rd vs 2nd in those two weeks, they would have fallen to 50th place. That is ridiculous.

- The QB/WR performance is a stomach punch. I feel like the model is smarter than it was in 2017 when I finished 3rd in QB and 34th in WR. While I made improvements to the model (as I always do) throughout the year, I think a fresh perspective this summer will help identify improvement areas.

Anyway, thanks to FantasyPros for running the contest. Congratulations to Matthew Hill of DataForceFF.com on winning the 2018 contest. Congrats to my pal Dalton for joining me in the 90/90 club in accuracy/boldness.