- Portland City Council has passed one of the most severe facial recognition bans in the U.S.

- Along with prohibiting city bureaus from using the technology, the new rules also apply to private companies, though not to private citizens.

- Amid the nationwide Black Lives Matter protests, surveillance has drawn renewed scrutiny, in part because facial recognition software often exhibits racial bias.

In a landmark move, Portland, Oregon, has enacted the nation's most stringent and sweeping facial recognition ban. On Wednesday, the city council unanimously passed two new ordinances that prohibit both private companies and city bureaus from using the surveillance software "in places of public accommodation."

The ban on government agency use goes into effect immediately, while the ban on private use takes effect on January 1, 2021. Private citizens are not subject to the rules, so personal biometric technology, like Apple's Face ID, is still permitted.


"Portland residents and visitors should enjoy access to public spaces with a reasonable assumption of anonymity and personal privacy," the municipal legislation states, in part. "This is true for particularly those who have been historically over surveilled and experience surveillance technologies differently."

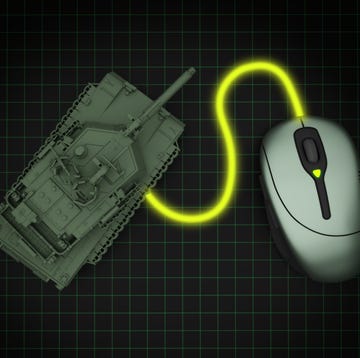

From a practical standpoint, that means government agencies, like the Portland Police Bureau, cannot use facial recognition software to analyze footage from body cameras, dash cams, or any other form of surveillance equipment. And under the second ordinance, private entities like hotels, 24-hour convenience stores, and even airports (Delta uses facial recognition to check in passengers) cannot use the technology either.

That last point matters because it goes well beyond existing facial recognition bans in other U.S. cities, which are narrower in scope. Boston, San Francisco, and Oakland, California, all have bans in place, but they apply only to government agencies.

"[Portland]...takes the ban a huge step further by also prohibiting its use by private entities," Robert Cattanach—a partner at Minneapolis, Minnesota-based law firm Dorsey & Whitney, who specializes in cybersecurity—wrote in a statement sent to Popular Mechanics. "The indiscriminate use and misuse of this technology is well known and in many ways frightening."

Cattanach points to the "non-transparent, nonconsensual" use of facial recognition technology in commercial settings as one example. In Portland, that concern is not hypothetical: In February, The Oregonian reported that a chain of convenience stores in Northeast Portland was using facial recognition-powered security cameras to lock and unlock doors and to keep tabs on shoplifters.

Concerns about facial recognition tools have also resurfaced across the U.S. during the wave of Black Lives Matter protests that broke out after the police killing of George Floyd in May.

There is already mounting evidence that face data can be used to identify and detain protesters. In May, Miami police used the controversial Clearview AI software to track down a woman who allegedly threw rocks at an officer during a protest. In another case, authorities charged a Philadelphia man with damaging police cars after using facial recognition software from the state Department of Transportation to match his face with images of protesters posted to social media.

Portland City Commissioner Jo Ann Hardesty pointed to this potential misuse of the technology, and the resulting infringement of civil liberties, in a statement posted on Twitter just before the city council passed the ordinances.

"With these concerning reports of state surveillance of Black Lives Matter activists and the use of facial recognition technology to aid in the surveillance, it is especially important that Portland prohibits its bureaus from using this technology," Hardesty said. "No one should be unfairly thrust into the criminal justice system because the tech algorithm misidentified an innocent person."

The Portland Police Bureau declined to comment for this story.

It's true that facial recognition software can lead to wrongful arrests. In January, Robert Williams of Detroit spent over 24 hours in police custody after a facial recognition system mistakenly matched his face with that of another Black man caught on surveillance footage during a shoplifting incident. The American Civil Liberties Union (ACLU) said it was the first known case of its kind in the U.S.

Errors like this are likely the result of algorithmic bias: systematic, repeatable errors that people build into a system, usually because the data used to develop it is poor or incomplete. In the case of facial recognition, many systems are far more likely to correctly identify white faces than Black faces.

In July 2018, the ACLU tested one facial recognition product, Amazon's Rekognition, and found it incorrectly matched 28 members of Congress with mugshots of people who had been arrested for a crime. The ACLU said the test, run with the publicly available tool, cost just $12.33.

Since then, Amazon has placed a one-year moratorium on police use of Rekognition. However, the e-commerce company spent $24,000 on lobbying efforts against the Portland ordinances, according to Fight for the Future, a Worcester, Massachusetts-based digital rights nonprofit.

Amazon does not publicly disclose all of its Rekognition customers, so it's not clear whether Portland police ever contracted with the company. However, the Washington County Sheriff's Office, just west of Portland, does use it.

Critics argue that a blanket ban on facial recognition is too blunt an approach. The convenience store owners in Portland who use facial recognition to catch shoplifters, for instance, see the technology as a deterrent and a way to protect staff. According to Cattanach, there are other specific use cases where the software could do more good than harm.

"Significant efficiencies and convenience with consensual screening at airports; improved security in public settings to identify known terrorists; and, invaluable assistance to law enforcement in appropriate emergency settings [are potential benefits]," he says.

Lia Holland—an activist with Fight for the Future, who lives in Portland—wholly disagrees.

"Even seemingly innocuous uses of facial recognition, like speeding up lines or using your face as a form of payment, normalize the act of handing over sensitive biometric information and pose a serious threat to security and civil liberties," she said in a statement sent to Popular Mechanics.

"Imagine being misidentified as a shoplifter at Rite Aid and when you go to pick up your prescription, the doors won't open. No court, no recourse. Just an algorithm purposely made too complex to scrutinize."

Only time will tell how the public, municipalities, and private firms will react to the new ordinances, but one question looms large: Will other cities follow suit? And if so, will that be enough to push developers toward building more equitable systems?