At the beginning of the day, Riina Vuorikari of JRC-IPTS describes the goals of the project.

Why is JRC-IPTS organising this? JRC stands for the Joint Research Centre of the European Commission, which comprises the Commission's in-house research centres. IPTS is the Institute for Prospective Technological Studies.

The project is intended to provide evidence-based scientific and technical support throughout the policy cycle at the European level.

There is a set of lightning presentations, which Doug Clow did an excellent job of live blogging at the following post, in the section called European Lightning presentations. Each presenter is an expert in the field of learning analytics and presents briefly on their own work.

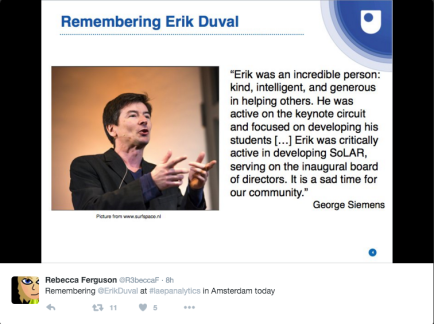

Rebecca Ferguson welcomes everyone to the event. At the start, the community takes a moment to acknowledge the loss of Erik Duval. Doug Clow provides a nice tribute to Erik, remembering how Erik put an emphasis on family life while managing a demanding academic career. Erik will be missed by the Learning Analytics community.

Rebecca then introduces the Learning Analytics European Policy (LAEP) project. She talks about the three research questions for the project:

- What’s the state of the art in Learning Analytics?

- What are the prospects for implementation?

- What’s the potential for European policy?

The project's work followed from these research questions. Adam Cooper put together a Glossary of Terms so that people new to the field could become familiar with the work. Rebecca asks anyone who has input on missing terms or suggested improvements to definitions to provide feedback on the Cloud Works site.

Adam Cooper then conducted a literature review on Learning Analytics with a focus on implementation. Rebecca mentions that there have been many literature reviews on the topic, but this is the only one with a focus on implementation.

Then the project created a set of inventories of policy, practice, and tools. Andrew Brasher provides an overview of the 14 policies, 13 practices, and 19 tools covered in the inventory.

Six case studies are covered by Jenna Mittelmeier & Garron Hillaire. The presentation outlines that these cases reflect a variety of company types, and that the key takeaways are not meant to be a checklist of how to make effective policies, but rather facets of the challenges policy makers face when working on Learning Analytics policies. After the organizations in each of the cases are introduced, a slide is shown that illustrates key takeaways for policy makers.

Then came a workshop in which we worked in groups to develop six positions, rotating between discussions in order to develop and refine the ideas.

Then one person from each group presented the group's idea, and the rest of the group rotated around the room to hear ideas from the other groups.

The goal was to prepare each presenter to give a position on what the future of learning analytics will become. Then we gathered the entire group in one large circle. The people who had just practiced pitching their ideas were then asked to present the refined ideas to the entire group.

As people presented their ideas to the entire group, anyone could challenge the ideas in order to keep refining and improving the concepts. It was fun to hear people present and defend the ideas of the workshop.