Network pricing strategies: tiered vs spot vs flat

This is intended to be a fairly exhaustive overview of the various options available to a next generation telecommunications carrier for pricing its products and services into a market. Managing pricing is increasingly important as telco operators around the world consolidate their multiple (legacy) infrastructure platforms into a single (next-generation network) platform (typically utilising IP/Ethernet/MPLS/D-WDM). I’ll also highlight some of the various strengths, weaknesses, opportunities and threats (SWOT analysis) associated with each approach. I intend to keep this analysis at a reasonably high level to make it more easily approachable and digestible. In reality, I could quite easily write an entire textbook on this subject alone (any publishers interested?). Thus I will not be including all the technical proofs or justifications for each condition or approach here (you can pressure me directly for those if you really desire them). So, without further ado, let’s embark upon our quest.

First, some assumptions:

- We are considering pricing for an incumbent or natural monopoly carrier (not a competitive carrier, as they have their own specific pricing and market segmentation strategies).

- Thus we assume a large, fixed and diverse footprint (as opposed to pure targeted demand driven growth). There is little scope to only build supply infrastructure in direct response to consumer demand.

We will also define four pillars for measuring success across our various models. These four pillars include providing support for social welfare (ensuring services are accessible to everyone in terms of cost), protection of long-term economic sustainability (ensuring sufficient profit margins to support ongoing maintenance and investment), support for premium performance (ensuring services are acceptable for top-end consumers, business) and fostering an open competitive marketplace (ensuring that vibrant, sustained and healthy competition evolves around the incumbent).

Natural usage patterns:

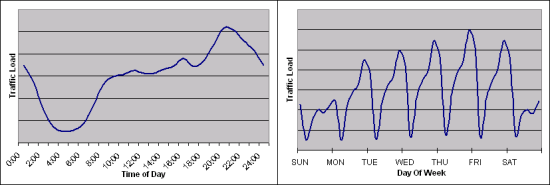

Natural traffic demands have a distinct and reasonably well understood pattern which is repeated over a number of cycles. These include a daily cycle (driven by the human day/night routine), a weekly cycle (which incorporates common rest days and weekends) and a yearly cycle (which incorporates various seasonal and societal effects, like public holidays). The diagrams below highlight some of the common characteristics often seen in usage graphs for telecommunications traffic. These sorts of usage patterns crop up in many human endeavours, including for example US residential broadband usage, Japan residential FTTH usage, UK road traffic and even electrical energy demand and supply. If it were graphed somewhere, I’m sure a similar traffic pattern would emerge from plotting demand for caffè lattes or cups of tea served over the day, just with different peaks!

The above are healthy examples of natural traffic demands. The graphs below, however, are classic examples of congested traffic behaviours. If any system exhibits this sort of flat-lined or smoothed behaviour, then that system is most likely being artificially constrained by contention occurring somewhere. This contention will impact the efficiency of the system and the performance experienced by all affected users (think traffic jams, brown-outs and packet loss/delay). The aim is usually to avoid such contention as far as is reasonable and possible or, if it does occur, to ensure that its effects on premium services are minimised (think transit lanes, multi-phase power supply and priority scheduling).

The approaches:

The basic order I’ve adopted for covering the approaches is as follows. First we’ll take a look at the optimal target. However, implementing the optimal target architecture is not really feasible just yet, as the market simply isn’t ready (it lacks maturity). Nevertheless, it is always useful to know where you ultimately want to be, even if you never make it there. Likewise, it is just as important to know where you absolutely do not want to be. Thus we’ll then look at the various solutions that don’t work, in some way or another, because they miss one of our four pillars. Lastly, we’ll look at the solutions that can work, leaving what I consider to be the optimal, workable solution till last. This final solution not only represents the best current practice option, it also forms an excellent stepping stone towards the known optimal solution. Once armed with knowledge about all the options and their implications, making rational and educated choices becomes fairly straightforward.

The optimal solution:

The optimal solution is to enable true commodity spot-pricing for network bandwidth. Spot pricing is very similar in concept to having a continuous and repeated auction which results in end-users only paying as much for the commodity as the market requires. Spot pricing is directly influenced by dynamic changes in supply and demand. This results in ever changing equilibriums of price, utilisation and availability. Unsurprisingly, there are a number of approaches for implementing and supporting spot-pricing. Some real-life examples include:

- Google Adwords(tm): It has been almost a decade now since Google revolutionised the online advertising business by adopting what is called the “generalized second price” auction mechanism. This mechanism is used to both determine the placement location/frequency of advertising impressions as well as pricing the click-through or capture resulting from an impression. This approach has also been adopted by other major online advertisers such as Yahoo. It implements a real-time bidding war between advertisers, such that each advertisement is billed the spot-price based on dynamic demand and value-perceptions.

- Amazon EC2(tm): Just recently, Amazon announced a new approach for selling excess capacity on their EC2 platform, based on market spot-pricing. This approach also implements a “generalized second price” auction. Users can bid competitively for access to any dynamic spare capacity and are allocated resources based on their respective bids relative to excess available capacity.

- Electrical Supply Market: This industry is in the early stages of possibly adopting a spot-pricing mechanism for wholesale electrical supply. Some market players such as PJM have already begun to leverage spot-pricing mechanisms.

- Free markets: Finally I’ll mention that many free trade markets (including FOREX, shares, derivatives and commodities), as well as many auction clearing houses (including well known auction sites, which support English or Dutch auction approaches) are all pseudo-examples of a true spot-priced market. The major difference between these spot-priced markets and the others, is that they are trading non-perishable goods (i.e. pricing stuff you can buy now and sell later; or vice-versa, sell now and buy later, aka short-selling). These latter examples also incorporate a complex risk exposure assessment into the value proposition.

A common theme amongst all of these approaches (with the exception of the last one) is a recognition that the resources are time-sensitive (i.e. they must be used at the time of purchase or forfeited), that the suppliers are able to advertise resource availability dynamically and that the consumers are therefore able to vary their respective bids for resources just as dynamically. In ideal markets, a continuous equilibrium is maintained such that the market consumers always pay a competitive price for resources and the excess capacity of providers is always efficiently utilised with maximum revenue extraction. This can result in a classic Nash equilibrium, where no participant can do better by unilaterally changing their strategy, and in that sense everyone wins.

One of the major concerns with spot-pricing is the lack of certainty around price expectations. Because the prices are dynamically determined by the open market participants, it is difficult to predict in advance what they will be. That said, there are tools and models available which do a reasonable job. One of the major benefits of spot-pricing, on the other hand, is that the price everyone pays is the minimum price that was necessary to secure the resource at that particular time. This is one of the very desirable properties of most auction mechanisms and is exhibited by the generalized second price auction approaches.
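To make the "pay only the minimum necessary" property concrete, here is a minimal sketch of a generalized second price allocation. It assumes a simple ranking by bid alone, and the names `Award`, `generalized_second_price` and the `reserve` parameter are hypothetical; real deployments add refinements that are not modelled here.

```python
from typing import NamedTuple


class Award(NamedTuple):
    bidder: str
    slot: int     # 0 is the most valuable slot (or unit of capacity)
    price: float  # price actually charged, not the bid


def generalized_second_price(bids: dict[str, float], slots: int,
                             reserve: float = 0.0) -> list[Award]:
    """Rank bidders by bid; each winner pays the bid ranked just below them."""
    # Assumption: bids below the reserve price are ignored entirely.
    ranked = sorted(
        ((name, bid) for name, bid in bids.items() if bid >= reserve),
        key=lambda item: item[1],
        reverse=True,
    )
    awards = []
    for slot, (name, _own_bid) in enumerate(ranked[:slots]):
        # The price is set by the next-highest bid, or the reserve if none.
        next_bid = ranked[slot + 1][1] if slot + 1 < len(ranked) else reserve
        awards.append(Award(name, slot, next_bid))
    return awards
```

With bids of 5, 3, 2 and 1 competing for two slots, the top bidder is charged 3 and the second bidder is charged 2: each pays just enough to hold their position, regardless of how much more they were prepared to pay.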

There are a few approaches available for implementing spot-pricing for telecommunications data. These include:

- A generalized auction on a per-packet basis. In this approach, every packet would be marked with a maximum-intent-to-pay by the generator of the packet. The network operators could then configure their network to dynamically adjust a parameter, the required-minimum-to-pay, based directly on network utilisation (this simulates an English auction). If the network is operating below threshold capacity, then packets are transported for the minimum price (possibly even for free). As network demand begins to exceed available capacity thresholds, the network dials up the required-minimum-to-pay and then queues, schedules and accounts only those packets whose maximum-intent-to-pay is at least the current minimum price, charging each of them that minimum price. As demand drops, the network can then dial down the required-minimum-to-pay, thus increasing access and utilisation. This can be done in real-time to ensure some target performance threshold is maintained. This can even be done in a tiered-performance environment. Other approaches to weighting and charging packets could also be applied: some could form a generalized second-price auction, others could simulate a Vickrey-Clarke-Groves auction, and still others could be developed experimentally. This would be a very rich area for experimentation and research.

- The creation of a spot-priced open market or reserve market. This approach emulates existing wholesale market practices for trading commodities, existing domestic share markets and even includes the traditional PSTN environment where wholesale trading of bulk call-minutes occurs. In this market, producers would make available bandwidth (subject to various constraints such as peak utilisation, time of use etc) and consumers would bid for allocation of bandwidth which upon winning, they could then proceed to use as per stated conditions associated with the resource.

As can be seen, these approaches require systems and capabilities that are not yet available and a maturity of thinking that the telecommunications industry and its customers have not yet reached. Mind you, this would be an ideal experiment to run on Google’s new Fiber-to-Communities platform. In these systems, a base level of packets could always be carried for (effectively) free, and only when contention for bandwidth begins to occur, would consumer packets begin to competitively out-bid each other for access to the remaining bandwidth, until all bandwidth is exhausted and then any packets failing to meet the current market price thresholds would simply be discarded (refused carriage). I would be very interested in experimenting with some real-time user/application policies on such a network.
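The per-packet mechanism described above can be sketched as a toy simulation. This is only an illustration under stated assumptions: prices are whole units, the price moves by a fixed step once per interval, and the names `Packet`, `PricedLink`, `threshold` and `step` are all hypothetical rather than part of any existing system.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    size_bytes: int
    max_intent_to_pay: int  # hypothetical per-packet marking, in price units


class PricedLink:
    """Toy link that dials a required-minimum-to-pay up and down with demand."""

    def __init__(self, capacity_bytes: int, threshold: float = 0.8,
                 step: int = 1) -> None:
        self.capacity_bytes = capacity_bytes  # bytes carried per interval
        self.threshold = threshold            # utilisation that triggers a rise
        self.step = step                      # price change per interval
        self.min_price = 0                    # required-minimum-to-pay

    def run_interval(self, offered: list[Packet]) -> tuple[list[Packet], int]:
        """Carry one interval of traffic; return (carried packets, revenue)."""
        # Only packets marked at or above the current minimum are eligible.
        eligible = [p for p in offered if p.max_intent_to_pay >= self.min_price]
        carried, used = [], 0
        for pkt in eligible:
            if used + pkt.size_bytes <= self.capacity_bytes:
                carried.append(pkt)
                used += pkt.size_bytes
        # Every carried packet is charged the minimum price, not its marking.
        revenue = self.min_price * len(carried)
        # Adjust the price for the next interval from the eligible demand.
        demand = sum(p.size_bytes for p in eligible) / self.capacity_bytes
        if demand > self.threshold:
            self.min_price += self.step
        else:
            self.min_price = max(0, self.min_price - self.step)
        return carried, revenue
```

While demand stays under the threshold the price sits at zero and everything is carried for free. Once eligible demand crosses the threshold, the minimum rises and packets marked below it are refused carriage until demand falls again.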

The solutions that fail:

Ok, so that was the optimal approach. Now what about the ones that simply fail to work, in some way or another? The first such approach is flat-rate pricing. Flat-rate pricing is very simple and is thus often popular with end-users. It mostly appears when services are either new, highly competitive or simply not well understood. As services mature and increase in usage, and as competitive forces establish an equilibrium or balance, usage-based pricing tends to dominate (until the service becomes true legacy). This maturing is happening now, worldwide, with Internet access and data services, both fixed and mobile. There is a really simple reason for this; it’s called the ‘tragedy of the commons’. In telecommunications, the maximum available bandwidth within a provider’s aggregation or interconnect network is a limited and finite resource. It always has been and always will be, especially during periods of peak contention/utilisation (often referred to as the ‘busy hour’). Although it is argued that this resource can be increased easily and arbitrarily, doing so always comes at a cost to the provider, and that cost must be passed on to subscribers in some way or another in order to be long-term sustainable. Unfortunately, aggressive competition often sees capacity increases being coupled with corresponding price decreases. This may be a very effective way to enter a market and acquire market share; however, as highlighted above, it is not long-term sustainable.

Without going into detailed analysis, it is relatively easy to see why implementing flat-rate-pricing as a strategy across all consumers cannot be made to scale. Let’s assume we have an aggregation trunk, which all users in a common geographical area must share. Also assume that all users are purchasing a competitive, flat-rate service package. Now admittedly, some users will be relatively light in their usage and only a small percentage will be relatively heavy (actually, many networks have shown that sometimes less than 5% of the user population can account for over 90% of all traffic in extreme cases, however it is usually closer to the 80:20 rule). At some point in time, users will be causing aggregate peak bandwidth to approach the available bandwidth, however this is where the tragedy of the commons effect kicks in. Up to this point, the service performance is excellent for all users, it’s just some users are gaining substantially more value out of the system by abusing the resources as much as they can. The light users are in effect subsidising the heavy users and hence they also become incentivised to use the service more. This will inevitably push the aggregate trunk into congestion. Now the carrier has a few choices, each of which will incur their own implications on the future.

| Option Chosen | Long-term Implication | Pillar Compromised |
| --- | --- | --- |
| Increase the trunk capacity and absorb the cost | Excellent service performance is maintained, end-users continue to pay existing fees, usage will continue to grow, profit margins of carrier impacted negatively. | Economic Sustainability |
| Increase trunk capacity, increase service price | Excellent service performance is maintained, end-users are required to pay increased costs or terminate service, profit margins of carrier are mostly maintained. | Social Welfare |
| Take no action | Service performance degrades due to increased congestion, end-users continue to pay existing fees, usage will continue to grow, profit margins of carrier are mostly maintained. | Premium Performance |
| Introduce Acceptable Use Limits (caps) | This is really just a medium-term stopgap measure. User demands and applications will continue to grow, thus increasing bandwidth demands even further. To remain competitive, carriers are forced to increase the cap limits over time, yet this merely postpones the inevitable: a trunk upgrade. | Economic Sustainability |

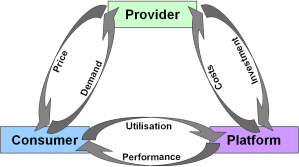

This complex relationship between providers, consumers, platforms and pricing versus demand versus performance is all summarised quite succinctly in the diagram below. I do not intend to discuss these relationships in any further detail here, however most should be fairly obvious and the diagram helps those wishing to explore the interactions a little further.

Finally, I should point out that flat-rate pricing is even worse when applied in a wholesale setting, where it breaks down much faster. You see, wholesale access seekers actively work to leverage the resources of the provider to maximise their own profits and revenue. Thus in conclusion, it is clear that simple fixed-rate pricing, based on concepts like peak capacity or peak throughput (the traditional all-you-can-eat approaches), simply does not work!
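The cross-subsidy at the heart of the flat-rate problem is easy to quantify. The sketch below compares a flat fee with what each user would pay if the same total revenue were split in proportion to usage. The function names and every number in the usage note are illustrative assumptions, not measurements from any real network.

```python
def usage_proportional_fees(usage_gb: list[float], flat_fee: float) -> list[float]:
    """Fee each user would pay if the same revenue were split by usage."""
    total_revenue = flat_fee * len(usage_gb)
    total_usage = sum(usage_gb)
    if total_usage == 0:
        # Nobody used anything, so the flat fee is the only sensible split.
        return [flat_fee] * len(usage_gb)
    return [total_revenue * used / total_usage for used in usage_gb]


def traffic_share_of_heaviest(usage_gb: list[float], fraction: float) -> float:
    """Share of total traffic generated by the heaviest `fraction` of users."""
    ordered = sorted(usage_gb, reverse=True)
    top_n = max(1, round(fraction * len(ordered)))
    return sum(ordered[:top_n]) / sum(ordered)
```

Take a hypothetical population of 100 users on a flat fee of 50, where 20 heavy users move 400 GB each and 80 light users move 25 GB each. The heaviest 20% then generate 80% of the traffic. A usage-proportional split would charge each heavy user 200 and each light user 12.50, so every light user is overpaying by 37.50 to fund the heavy users.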

So, is there a way to address some of these shortcomings by for instance, introducing bandwidth quotas (threshold caps with rate-limiting or excess charging) or by becoming creative with accounting (on-net versus off-net and peak versus off-peak traffic)? In short, the answer is no. Whilst these approaches, even if applied with very creative, tiered thresholds, actually can work well with end-users, they really do not scale well with aggregate wholesale access seekers. Also, there is a fundamental failing of such systems. They do not distinguish between different applications generating different traffic streams well. They completely fail to recognise the difference between bandwidth consumed and actual utility/value. For instance, not all bandwidth intensive applications represent premium valued services, likewise, some premium value services may actually consume very little bandwidth. Worse, this is true even with traffic from the same source, for instance Youtube, where it fails to recognise that not all video streams represent the same value proposition to the end-user (think individual content versus premium content). Likewise, with voice over IP, not all calls represent the same value proposition to each end-user, all the time. This truth holds for all traffic streams! In short, this is a very blunt sledgehammer approach for what really demands the surgical precision of a scalpel. Unfortunately, surgical precision also isn’t the only answer as our next candidate demonstrates. Despite support for high precision targeting, it too is a general failure when used in pricing data services.

Session based charging usually involves identifying individual sessions and connections between end-users and charging for them based on an assumption of utility value. Whilst I have nothing against session based charging used in appropriate circumstances (such as for video-on-demand, business grade SIP services or online-gaming), I do take great offense at providers attempting to perform deep-packet-inspection (DPI) to then charge me for sessions and services which they are not natively providing (beyond mere packet transport). This practice of using DPI to manage and/or charge for traffic streams, rears its ugly head every now and then. Not only is it inappropriate, it is also fundamentally misguided–as it ultimately leads to an arms race against your own user base (a quick review of the ongoing sagas involving the RIAA and the MPAA illustrates why attacking your own users can be a really bad business plan). Such approaches can only end in tears. In short, if you’re not directly involved in the end-to-end application service, then do not try to charge for it as you really have no idea of the value the end-users may associate with the service. If you try to charge everyone, the service (and end-users) will increasingly attempt to obfuscate themselves to prevent detection–ultimately this leads to an arms race of increasing complexity and one which the service providers will eventually lose. You are served much better by finding mechanisms whereby users who attribute value to certain applications are given strong incentives to identify those applications to you, so that they can be guaranteed high performance.

Another form of session based charging, is that of time charging. Again, in an appropriate context, time charging is perfectly acceptable (such as video-conferencing services, tele-presence services, real-time online gaming etc). However, it can also be used in completely inappropriate manners. For instance, trying to charge network access based on time is a concept that I hope is now well and truly buried in the past. The future is an always-on, always connected, always ready communications service. It always has been, it’s just the bell-heads never really understood the net-heads and vice versa. In short, time charge only for those services that make sense to be time charged.

It should be fairly clear that, regardless of whether or not some form of session-based charging is used, there will always be a significant amount of end-user traffic that cannot be easily identified and valued using these mechanisms alone. These approaches are merely tools, not solutions. We still need more.

The solutions that work:

So we’ve looked at the solutions that either fail miserably or are incomplete. What we’ll look at now is the simplest approach that typically works today, especially in service provider and wholesale markets. And then I’ll show how this approach can be extended to offer the greatest flexibility for discriminating price and performance. All of these solutions can be combined with many of the feature sets of the previous approaches. The reason why they work is because they directly incur charges proportional to actual resource consumption and this avoids the ‘tragedy of the commons’.

Throughput metering and charging is really an extension or generalization of the download/upload quota mechanism, with the difference that it is typically much better received by the wholesale market and, conversely, is neither as well received nor as well understood by the retail end-user market. This approach works by continuously metering (monitoring and recording) usage over a period of time (usually a calendar month). A statistical formula is then applied across the readings to generate a summary usage figure, which is charged accordingly. These formulas can be applied to combinations of the upstream and downstream traffic, and can be applied as absolutes (charging by gigabyte-blocks-transferred, more common in retail scenarios) or as relative rates (charging by megabits-per-second, more common in wholesale scenarios).

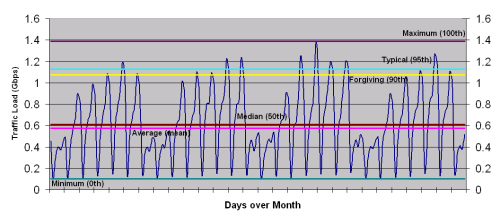

In the retail scenario where absolute quantities of transfer are charged, the following are typical approaches. Usually the consumer is committed to a minimum spend which is established by setting a threshold. Any transfer above the threshold is then usually charged in fixed blocks of excess or, in the case of CAP-plans, the service performance characteristics may be severely reduced (rate-shaped or deprioritised). In the wholesale scenario, similar thresholds exist, however the charging summary approaches can be quite varied and rich. Again, when usage exceeds some pre-determined threshold, either excess charges can be applied or services can be artificially performance constrained in order to prevent unfettered resource consumption. The most common statistical formulas for summarising usage data are illustrated in the figure below.

This figure represents a very typical, uncongested, monthly traffic graph of a combined residential and business retail service provider (RSP). Usually accounting data is captured every 5-15 minutes over the greater period being measured. Illustrated on the graph are the most common approaches for summarising this continuous rate data. Starting at the bottom and working our way to the top, they include: (a) the minimum (or 0th percentile measure) in this case it is equal to 100Mbps; (b) the median (or 50th percentile measure) in this case it is equal to 573Mbps; (c) the average (or true statistical mean) in this case it is equal to 612Mbps; (d) the forgiving peak (or 90th percentile measure) in this case equal to 1073Mbps; (e) the typical peak (or 95th percentile measure) in this case equal to 1129Mbps; and finally (f) the maximum peak (or 100th percentile measure) in this case equal to 1387Mbps. Now, just some background: the 95th percentile measure is probably the most reasonable for all parties, however the mean, median and 90th percentile are all reasonably commonplace. The advantage of using such approaches is that consumers are not heavily incentivised to avoid or constrain their peak rates and hence gain significant performance benefits. Interestingly, some wholesale providers utilise the 100th percentile or maximum approach and as can be seen, such an approach heavily penalises the consumer, often resulting in them artificially constraining and reducing their traffic to lower peaks. This not only impacts their performance but it also affects the statistical Erlang gain benefit to the wholesale service provider. It is extreme and very unforgiving, definitely not industry or world best practice.
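The summary measures listed above can be computed directly from the periodic rate samples. The sketch below uses the nearest-rank percentile method, which is one common convention and an assumption here; a given provider’s billing system may interpolate or round differently. The function names are hypothetical.

```python
import statistics


def percentile(samples: list[float], pct: int) -> float:
    """Nearest-rank percentile of the samples, for a whole-number pct in 0..100."""
    if not samples:
        raise ValueError("at least one sample is required")
    if not 0 <= pct <= 100:
        raise ValueError("pct must be between 0 and 100")
    ordered = sorted(samples)
    # 1-based rank, rounded up with integer arithmetic to avoid float error.
    rank = max(1, (pct * len(ordered) + 99) // 100)
    return ordered[rank - 1]


def summarise(samples_mbps: list[float]) -> dict[str, float]:
    """Summaries (a) to (f) from the text, computed over one billing period."""
    return {
        "min": percentile(samples_mbps, 0),
        "median": percentile(samples_mbps, 50),
        "mean": statistics.fmean(samples_mbps),
        "p90": percentile(samples_mbps, 90),
        "p95": percentile(samples_mbps, 95),
        "max": percentile(samples_mbps, 100),
    }
```

The difference between the last two measures is what matters commercially. If 95 of 100 samples sit at 100Mbps and 5 samples burst to 1000Mbps, the 95th percentile is still 100Mbps while the maximum is 1000Mbps, so a customer billed on the maximum pays ten times as much for the same brief bursts.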

The best-practice solution:

Finally we reach the last proposal which is really just a further generalisation and extension of the throughput metering and charging from above. This approach combines all of the best features available from all solutions in an optimal compromise and meets all four of our pillar requirements with flying colours. But it brings with it a very important proviso to make it work.

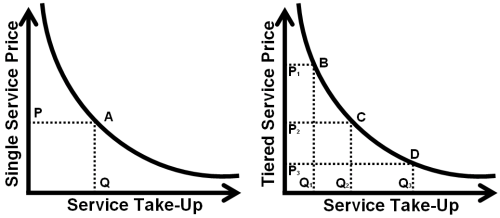

Tiered services with tiered pricing is the preferred mechanism for balancing all of the desirable features of the above whilst working around many of their shortcomings or limitations. Instead of offering flexibility within just a single dimension of traffic characteristics, the tiered performance approach aims to offer flexibility across a range of clearly differentiated performance characteristics, which can then be priced in a tiered manner as well. By not being constrained to a single performance characteristic, an incumbent carrier can offer and maintain a number of distinct services to a more competitive marketplace. If the carrier does not offer this capability itself, it is inevitable that a competitive service aggregator will be forced to enter the market to offer it. This is not desirable, as it can add significant additional costs to the end-user services. This may be best illustrated using a common economic analysis.

The primary purpose of tiered pricing is to capture the market’s consumer surplus. It also supports social welfare through increased output (and this is the case with incumbent carriers). In a market with a single clearing price, some customers (usually the ones with low price elasticity) would be willing to pay more than the single market price, whilst others (the social welfare group) are not willing, or possibly not able, to pay the single market price. Tiered pricing introduces the flexibility necessary to independently target each market segment at an appropriate price threshold. The diagram below illustrates the various effects of being able to tier pricing to various groups. In the single service price case, the addressable market for price (P) is represented by the corresponding demand for services (Q). The revenue opportunity is represented roughly by the area of the rectangle (P multiplied by Q). In the tiered service price case, individual prices P1, P2 and P3 are offered (preferably in a manner that appeals to each market segment) and the demand for services, as represented by Q1, Q2 and Q3 respectively, subsequently increases. More importantly, the total addressable market increases, output increases and revenues increase. It is entirely possible that, under the right set of circumstances and with appropriate management, the entire market will be able to afford some form of service (full social welfare support) without compromising the long-term viability of the producer.
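The revenue and output effect of tiering can be worked through numerically. The sketch below assumes a hypothetical linear demand curve and perfect segmentation (each customer buys the most expensive tier they are willing to pay for, which in practice relies on the tiers being differentiated by performance). The constants `MAX_PRICE` and `MARKET_SIZE` and the function names are illustrative assumptions only.

```python
MAX_PRICE = 100      # hypothetical price at which demand falls to zero
MARKET_SIZE = 1000   # hypothetical number of customers at a price of zero


def demand(price: int) -> int:
    """Customers willing to pay at least `price` on a linear demand curve."""
    return max(0, MARKET_SIZE * (MAX_PRICE - price) // MAX_PRICE)


def tiered_revenue(prices: list[int]) -> tuple[int, int]:
    """Return (total revenue, customers served) for a set of price tiers."""
    revenue, served = 0, 0
    # Work from the highest tier down; each tier picks up only the customers
    # who were not already captured by a more expensive tier.
    for price in sorted(prices, reverse=True):
        total_at_price = demand(price)
        revenue += price * (total_at_price - served)
        served = total_at_price
    return revenue, served
```

Under these assumptions a single price of 50 serves 500 customers for a revenue of 25,000. Offering three tiers at 75, 50 and 25 serves 750 customers for a revenue of 37,500: both output and revenue rise, which is the effect described above.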

By choosing to leverage base infrastructure capabilities such as differentiated performance, a carrier can price differentiated services directly into the market. This allows the first level of consumers, the retail service providers and/or aggregators, to select for themselves which entry points they desire and which market segments they are going to differentiate them into. Hence a rich portfolio of aggregators will exist that can leverage the differentiated capabilities and differentiated prices to develop market-based value opportunities. This increases uptake, increases margins, increases total market efficiency and reduces costs to consumers that have high price elasticity. This approach emulates, to some degree, the traditional approach adopted by incumbent telcos, who would normally have value-based priced each legacy overlay network individually.

For the record, tiered pricing is very common across multiple industries, as the following list of examples illustrates:

- Volume based wholesale price discrimination where larger bulk quantity purchases are offered at a reduced per unit price (many wholesalers and component retailers). This could also include peak and off-peak pricing (utilities) etc.

- Premium product differentiation, whereby a similar product is marketed as premium and sold at a higher price (supermarkets and grocery stores). Other examples include the automobile industry (limited edition paint releases) and the airline industry (weekend away conditions, economy, business, first …) etc.

- Segmentation pricing whereby children, students and pensioners are offered reduced prices when compared with working adults (public transport, entertainment etc).

- Other examples include segmentation by gender (venue cover charges), occupation (education, volunteer, police force etc) and geography (local, interstate, international).

Without such tiered pricing strategies, many products and services would not be as affordable as they currently are for many people.

The proviso: There is an important proviso regarding ‘tiered services’ and their implementation. As I’ve covered before in my article “Which Takes Precedence: Your New NGN or Your Current Business Model”, once you decide that it is highly desirable to be able to offer tiered services at tiered prices, it becomes mandatory that you implement your network aggregation, in particular your class of service (CoS) queuing, in a very specific manner. Ensuring that the classes are not only relative in priority but actually perform differentially under various network load and utilisation conditions requires that all traffic from all sources of a common class eventually aggregates to share a common forwarding block (i.e. same network path, same interface queue and same egress scheduler). This ensures that all users of a given class share all the network performance characteristics of that class at a given instant in time. This criterion protects the carrier’s ability to then differentially price such classes, safe in the knowledge that network users will be unable either to reliably arbitrage the system or to unfairly leverage the network services beyond their purchased QoS performance. It also naturally and almost unavoidably leads to a path of four abstract classes of service, each with in-profile and out-of-profile markings (why, you ask? Well, you’ll need to hassle me for those details, but the reasoning is pretty bullet-proof). Now, due to limitations within both Ethernet and MPLS headers for signaling CoS (especially across interconnect boundaries), it is necessary to constrain the CoS identifiers to 3 bits only, which provides a hard limit of, at most, 8 differential forwarding characteristics. This is why abstract forwarding behaviours are needed for classification, as opposed to individual application or service constructs. The abstract behaviours are best described as:

- Expedited: a CoS suitable for carrying priority, real-time and interactive traffic such as voice, video conferencing services, online gaming etc;

- Streaming: a CoS suitable for large scale streaming of video, streaming of audio, reliable bulk and transactional messaging/transfer, network storage etc;

- Economy: a CoS for basic economy access services (non-premium Internet and background services); and lastly

- Premium: a CoS that sits between the two best services above and the cheapest service, providing a business grade, premium multi-use capability. It does this by trading some burst discard to ensure small queues.

Combined, these classes implement the holy grail of supporting multiple IP NGN grade of service (GoS) offerings. Practically every type of application can be cost justified into these abstract traffic classes based on the desired GoS of the user. And that is the magic observation–that the mapping of applications into a CoS is based on a value-assessment by the user (not the application) for the GoS required. I.e. an assessment of how much the user is willing to pay for their application to receive some sort of guaranteed performance from the network. Each user will have a different threshold of cost justification for the same applications, which is subject to change over both time and context, so there really is no ‘one size fits all’.
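Four abstract classes, each with an in-profile and an out-of-profile marking, give exactly eight combinations, which is all a 3-bit CoS field can carry. The sketch below shows one way such a packing could work. The numeric values and bit layout are purely illustrative assumptions; they are not a standard Ethernet or MPLS mapping and not necessarily the assignment a real carrier would choose.

```python
from enum import IntEnum


class ServiceClass(IntEnum):
    # Hypothetical ordering, cheapest first; the values are illustrative only.
    ECONOMY = 0
    PREMIUM = 1
    STREAMING = 2
    EXPEDITED = 3


def encode_cos(service_class: ServiceClass, in_profile: bool) -> int:
    """Pack the class (2 bits) and the profile flag (1 bit) into 3 bits."""
    return (int(service_class) << 1) | (1 if in_profile else 0)


def decode_cos(value: int) -> tuple[ServiceClass, bool]:
    """Recover the class and profile flag from a 3-bit CoS value."""
    if not 0 <= value <= 7:
        raise ValueError("CoS value must fit in 3 bits")
    return ServiceClass(value >> 1), bool(value & 1)
```

Because the field is fully used by these eight combinations, there is no room left for per-application markings, which is why the classes have to describe abstract forwarding behaviours that users map their own applications onto.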

Tiered traffic classes also offer one final and probably the biggest advantage overall. Once you have logically tiered your next generation packet network, you are left with the flexibility to change the way you price each class and even each customer without restriction. You can choose to adopt any, or even all, of these approaches that have been described and apply them to any traffic streams, even simultaneously. In other words, this implementation methodology provides you with complete business freedom to market your products any way you want. Now isn’t that the degree of flexibility and capability that every business desires?

Conclusion:

Ok, the end is near. A final point I’d like to make is to highlight that the end-consumer KNOWS precisely what is of value to them and what is not, they KNOW exactly how much they are willing to pay for that value and all they NEED is a mechanism that allows them to efficiently signal that value proposition to BOTH the retail-service provider AND the intermediate carriers. This is the real problem that needs to be addressed, as currently end-users have only limited options for signaling these value propositions, and even then can usually only signal them independently (ie. either to the underlying carrier by choosing a premium access product or to the end-retail-service provider by subscribing to and paying for the service/content being accessed). What we really need, is the right glue to bring these two together (automatically). But that is the topic of a whole other post.

The tiered pricing approach illustrated here provides a fundamental underlying mechanism required to support this rich and automatic signaling. It is not the whole story however.
