Volume 7, Issue 7, July – 2022 International Journal of Innovative Science and Research Technology

ISSN No:-2456-2165

Hand Gesture Vocaliser for Deaf

Faisal Qayoom, Balaji N, Gurukiran S, Sourabh S. N.

UG Students, Dept. of Electronics and Communication Engineering,

Jyothy Institute of Technology, Bangalore,

Karnataka, India

Abstract - Deaf-mute people need to communicate with hearing people in their everyday lives. They use sign language to talk with others, but only those who have received special training can understand it. Sign language conveys meaning through hand gestures and other forms of non-verbal behaviour: it combines hand shapes, hand orientation and hand movements, body movement, and facial expressions at the same time to express the signer's thoughts fluidly.

The project is motivated by the need for an electronic glove that translates sign language into speech, in order to lower the communication barrier between the mute community and the general public.

A wireless electronic glove is used: an ordinary driving glove fitted with a flex sensor along each finger. Mute users perform hand gestures while wearing the glove, and these gestures are converted into speech so that hearing people can understand what they are expressing.

People interact with each other to share their ideas and opinions with those around them, but this is not always possible for deaf-mute people. Sign language gives deaf-mute people a way to communicate without relying on acoustic sound.

Thus, to bridge this gap, this project implements a real-time, glove-based speech assistant system for the speech impaired.

I. INTRODUCTION

Historically, the term deaf-mute has referred either to a person who can speak but cannot hear, or to a person who is both deaf and unable to speak. Deaf people face many difficulties that restrict their ability to carry out their daily tasks.

Communication is the most fundamental and important form of interaction between people, so sign language or gestures are used to interact with deaf and mute people. However, the sign language used by mute people is not easily understood by the general public, and there is no single sign language standardised internationally. Vocalisers convert sign language into speech that both blind people and the general public can easily understand.

Gesture Vocalizer is a device designed to enable communication within deaf and blind communities and between them and hearing people. The system can be reconfigured as a smart device so that, in principle, it works with different sign languages. A gesture vocalizer is essentially a data glove and a microcontroller that can detect almost all movements of a hand and convert certain predefined movements into intelligible speech. The aim is an electronic device that translates sign language into speech, making communication between the mute community and the general public possible.

The main components used here are flex sensors, a glove, an LCD display, an Arduino UNO, and speech synthesis. The features and architecture of the Arduino UNO are explained in Section III. A flex sensor is essentially a variable resistor whose resistance increases as it is bent, so it is used to sense how far each finger is bent. An LCD is a flat-panel display technology commonly used in TVs and computer monitors, and also in the screens of mobile devices such as laptops, tablets, and smartphones. A USB cable is used to upload the program to the Arduino UNO, and a Bluetooth module is used to communicate with the mobile phone. The glove has a flex sensor attached to each finger to detect bending. When the bending of the fingers matches one of the input combinations programmed into the Arduino, the corresponding output is shown. A flex sensor is suitable whenever a bend or angle change has to be measured, because its internal resistance changes almost linearly with its bend angle.

Fig. 1: Indian Sign Language

IJISRT22JUL559 www.ijisrt.com 706


II. PLANNED SYSTEM

Fig. 3: Arduino UNO

B. FLEX SENSORS:

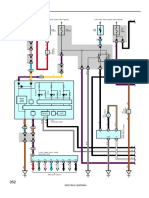

Fig. 2: Block diagram Flex sensors (Fig-4) are the sensors that degree the

amount of deflection or bending. They act as the supply of

Block diagram of hand gesture vocalizer for deaf is as input to the microcontroller.

shown in Fig-2. The device has hardware and software

program. Hardware part consists of flex sensors, Arduino,

LCD display, Bluetooth module. Software consists of the

programming of Arduino in keeping with the gestures. This

gadget is split into three elements:

Gesture enter

Processing the records

Voice output using smartphone

Gesture input:Sensors are located at the hand of deaf

human beings which converts the parameter like finger

bend hand position perspective into electrical sign and

provide it to Atmega 328 controller and controller take Fig. 4: Flex sensor

movement in line with the signal.

C. LCD 16×2:

Processing the records: The output of flex sensors is Liquid Crystal Display (Fig-5) display is an electronic

converted into digital form by using inbuilt analog to device. A 16x2 LCD presentations 16 characters according

digital converter of Arduino UNO. Predefined gestures to line and there are 2 such strains. This LCD includes

with corresponding messages are saved in the database Command registers and Data registers. The command

of the microcontroller. Arduino UNO checks whether registers keep the command commands given to the LCD.

the enter voltage from the flex sensors exceeds the edge The information registers shops the records to be displayed

price this is stored inside the database. on the LCD. It is used for consumer interface.

Voice output using smartphone:The output from the

Arduino is sent to LCD and smartphone via Bluetooth

module. LCD displays the message that is assigned to

the gesture within the program. Speech signal is

produced using text to speech converter application

through mobile phone.

III. HARDWARE COMPONENTS REQUIRED

A. ARDUINO UNO:

Arduino UNO is a microcontroller board based on

ATmega328P. It consists of 14 virtual input/output pins (of

which 6 can be used as PWM outputs), 6 analog inputs, a

sixteen MHz ceramic resonator, a USB connection, a power

jack, an ICSP header and a reset button. It incorporates the

whole lot needed to assist the microcontroller; truly join it

to a pc with a USB cable or power it with a AC-to-DC Fig. 5: LCD

adapter or battery to getcommenced.
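The gesture-input and processing stages of Section II (flex sensor, built-in ADC, threshold check) can be modelled on a host computer as below. This is a minimal sketch under stated assumptions, not the authors' firmware: it assumes each flex sensor forms the lower leg of a voltage divider with a fixed resistor to 5 V (so the voltage rises as the finger bends, matching the "exceeds the threshold" check), a 5 V ADC reference, and the ideal 10-bit conversion ADC = Vin·1024/Vref of the ATmega328P. The function names and all resistor and threshold values are hypothetical; on the board the count would come from `analogRead()`.

```cpp
// Hypothetical host-side model of one flex-sensor channel (not the authors' firmware).
// Assumed wiring: 5 V -> fixed resistor -> analog pin node -> flex sensor -> GND,
// so the node voltage rises as the flex sensor's resistance rises with bending.

// Voltage at the divider node, in volts.
double dividerVoltage(double rFlexOhms, double rFixedOhms, double vcc) {
    return vcc * rFlexOhms / (rFlexOhms + rFixedOhms);
}

// Ideal 10-bit ADC result for an input voltage, ADC = Vin * 1024 / Vref,
// clamped to the valid range 0..1023 before converting to int.
int adcCount(double vin, double vref) {
    double raw = vin * 1024.0 / vref;
    if (raw < 0.0) return 0;
    if (raw > 1023.0) return 1023;
    return static_cast<int>(raw);
}

// Threshold test from the "processing" stage: a finger counts as bent
// when its reading exceeds the stored threshold for that sensor.
bool isBent(int count, int threshold) {
    return count > threshold;
}

// Rough bend angle from a two-point calibration (finger flat, finger fully bent).
// Even if resistance is close to linear in angle, the divider makes the ADC count
// only approximately linear, so this is an estimate clamped to [0, fullBendDeg].
double estimateAngleDeg(int count, int flatCount, int bentCount, double fullBendDeg) {
    if (bentCount == flatCount) return 0.0;  // degenerate calibration
    double t = static_cast<double>(count - flatCount) / (bentCount - flatCount);
    if (t < 0.0) t = 0.0;
    if (t > 1.0) t = 1.0;
    return t * fullBendDeg;
}
```

For example, with a hypothetical 10 kΩ fixed resistor and a sensor that reads 10 kΩ flat and 30 kΩ bent, the node moves from 2.5 V (count 512) to 3.75 V (count 768), so a threshold of about 600 would separate the two states.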


D. BLUETOOTH (HC-05):

HC-05 is a Bluetooth module (Fig. 6) designed for wireless communication. It can be used in either a master or a slave configuration. Bluetooth serial modules allow serial-enabled devices to communicate with each other over Bluetooth.

Fig. 6: Bluetooth HC-05

SOFTWARE REQUIRED

E. ARDUINO IDE:

The Arduino IDE is used to write the program for the Arduino UNO and to upload it to the board over USB. The Arduino UNO is a microcontroller board based on the ATmega328P, with 14 digital input/output pins (6 of which can be used as PWM outputs), six analog inputs, a 16 MHz clock, a USB connection, a power jack, an ICSP header and a reset button; it can be powered by an AC-to-DC adapter or a battery.

IV. CIRCUIT DIAGRAM

A. GLOVES:

The glove has a flex sensor attached to each finger to detect the bending of the fingers from the change in resistance that the bending causes.

B. FLEX SENSORS:

Flex sensors are usually available in two sizes, 2.2 inch and 4.5 inch. Although the sizes differ, the basic function remains the same. Flex sensors are also classified by their resistance. A flex sensor is suitable whenever a bend or angle has to be measured, because its internal resistance changes almost linearly with its bend angle.

The flex sensors, also called flexible potentiometers, are therefore fixed on the glove to detect the bending of the user's fingers.

C. ARDUINO WITH GESTURE DETECTION:

We use the Arduino UNO to interface all the hardware: the LCD, the flex sensors and the Bluetooth module. Using the Arduino IDE, we programmed a particular set of combinations of flex-sensor bends as inputs, and the Arduino gives visual output on the LCD and audio output through the Bluetooth-connected device.

D. LCD MODULE:

We use a 16x2 LCD display, which can show up to 32 characters of visual output. The LCD is interfaced with the Arduino UNO board, and the message assigned to the recognised combination is displayed when the user uses the glove.

E. SPEECH SYNTHESIS:

A Bluetooth module interfaced with the Arduino UNO sends the recognised message to a smartphone, which gives an audio output according to the input given by the user.
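The "particular set of combinations" programmed into the Arduino can be pictured as a lookup from the bent or straight state of each finger to a message. The sketch below is a hypothetical illustration, assuming four flex sensors (as in the implemented prototype), one threshold per finger, and a small table of placeholder messages. The paper does not list its actual gestures, thresholds, or messages, so the table entries and the names `bendPattern` and `lookupMessage` are invented for illustration.

```cpp
#include <array>
#include <cstddef>
#include <string>

// Hypothetical gesture table: patterns and messages are placeholders,
// not the gestures used in the paper. Bit i is set when finger i is bent.
struct Gesture {
    unsigned pattern;
    const char* message;
};

const std::array<Gesture, 4> kGestures = {{
    {0b0001u, "HELLO"},
    {0b0011u, "I NEED WATER"},
    {0b0111u, "PLEASE HELP ME"},
    {0b1111u, "THANK YOU"},
}};

// Convert four ADC readings into a bend pattern, one threshold per finger.
unsigned bendPattern(const std::array<int, 4>& readings,
                     const std::array<int, 4>& thresholds) {
    unsigned pattern = 0;
    for (std::size_t i = 0; i < readings.size(); ++i) {
        if (readings[i] > thresholds[i]) {
            pattern |= 1u << i;
        }
    }
    return pattern;
}

// Return the message for a pattern, or an empty string if no gesture matches.
std::string lookupMessage(unsigned pattern) {
    for (const Gesture& g : kGestures) {
        if (g.pattern == pattern) {
            return g.message;
        }
    }
    return "";
}
```

Using a separate threshold for each finger is a design choice that allows for the sensor-to-sensor variation the paper lists among its challenges; the thresholds would have to be calibrated for each glove.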

V. WORKING PRINCIPLE

In the gesture vocalizer for deaf and mute people, sensors placed on the user's hand convert parameters such as finger bend, hand position and hand angle into electrical signals and provide them to the ATmega328 controller. The controller acts according to these signals, and the recognised gesture is converted into the matching speech and displayed on the LCD.

The text output from the Arduino is sent to the LCD and, through the Bluetooth module, to the smartphone. The LCD shows the message assigned to the gesture in the program, and the speech signal is produced by a text-to-speech converter application on the mobile phone.

Fig. 7: Circuit Diagram
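In the working principle, the same message goes to two places: the 16x2 LCD and, through the HC-05, the phone's text-to-speech app. The helpers below sketch how that message might be prepared for both. They are hypothetical and assume plain-ASCII messages, that longer messages are split across the two 16-character rows (up to the 32 characters noted for the LCD module), and that the phone app reads one message per newline-terminated line; the paper does not name the app or its message format, so that framing is an assumption.

```cpp
#include <cstddef>
#include <string>
#include <utility>

constexpr std::size_t kLcdCols = 16;  // characters per row on a 16x2 LCD

// Split a message across the two rows of a 16x2 LCD (at most 32 characters).
// Each row is padded with spaces to 16 characters so leftover text from the
// previous message is overwritten; characters beyond 32 are dropped.
std::pair<std::string, std::string> lcdRows(const std::string& msg) {
    std::string top = msg.substr(0, kLcdCols);
    std::string bottom = msg.size() > kLcdCols ? msg.substr(kLcdCols, kLcdCols) : std::string();
    top.resize(kLcdCols, ' ');
    bottom.resize(kLcdCols, ' ');
    return {top, bottom};
}

// Frame a message for the HC-05 serial link as one newline-terminated line
// (assumed format), so the phone-side app can tell where each message ends.
std::string bluetoothLine(const std::string& msg) {
    return msg + "\n";
}
```

The split is by character count, so a word can be broken across the rows (for example, "PLEASE CALL A DOCTOR NOW" becomes "PLEASE CALL A DO" and "CTOR NOW"); word-aware wrapping would be a small refinement. On the board itself, the two rows would be written with a character-LCD library such as Arduino's LiquidCrystal, and the line would be printed to the serial port wired to the HC-05.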


VI. FLOW CHART

Fig. 9: Hardware Implemented

Fig. 8: Flow Chart

The user makes the shape of required sigh the gesture

input is given to the Arduino.

As we have placed sensors on gloves which are used by

the deaf person, so whenever he/she has to say something

he/she will just make the sign and accordingly the flex

resistance changes and produces the corresponding output

which will be shown by the display and speech using

smartphone.

Arduino UNO matches the motion within the program

and produces the text output which is then sent to mobile

phone using Bluetooth module HC-05 in which the

application converts the text into speech and produces the

voice output

VII. IMPLEMENTATION AND RESULTS

Fig ix shows the setup of the whole project, where the LCD

display, Bluetooth module is interfaced to the

Arduinoboard.

Microcontroller is programmed accordingly using the

Arduino IDE software

Four flex sensors are connected to the Arduino board, the

Bluetooth module is connected to the Bluetooth app using

smartphone to provide the audio output.

Fig 10 shows the smartphone result.

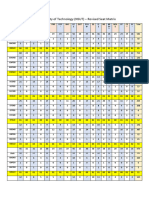

Fig 11 shows some gestures with parallel messages

Fig.10: Smartphone Result


Fig. 11: Some gestures with their corresponding messages (columns: Sl. No., Gesture Input, Output; four gestures)

VIII. APPLICATIONS, ADVANTAGES AND CHALLENGES

A. APPLICATIONS

It can be used by the deaf and mute community to increase their interaction with society.

The prototype can be developed further for people who are paralysed because of a stroke or an accident, to help them communicate easily with little effort.

The device's functionality can be extended with more automation functions to make life easier for people who are physically challenged.

B. ADVANTAGES

It is a project with a social motive.

Deaf people can easily talk with hearing people.

Implementation is easy.

It provides audio as well as visual output.

C. CHALLENGES

One of the major drawbacks of the project (i.e., the Hand Gesture Vocalizer) is that the sensors are very sensitive, so their output values can vary considerably for the same bend.

The cost of the sensors is also a problem: even a little excess pressure on a sensor can burn it out, after which it has to be replaced.

IX. CONCLUSION AND FUTURE SCOPE

A. CONCLUSIONS

The design and implementation of the Hand Gesture Vocalizer for the Deaf proved to be a challenging, rewarding and exciting experience. Keeping the objectives of the course in mind, we successfully integrated the hand glove with hardware components such as the sensors, the Arduino UNO and the Bluetooth module, and with software (the Arduino IDE). Since the project is based on the Arduino UNO, its smart features and automation functions can easily be configured for different applications.

The project is designed primarily for deaf people, to help them communicate easily with hearing people.

B. FUTURE SCOPE

Further enhancements that could improve the functionality of the Hand Gesture Vocalizer are as follows:

More gestures could be supported by using more capable hardware such as the Raspberry Pi, or more advanced software, possibly including image processing to analyse the hand movements of sign language and give better results.

The automation could be extended to more functions, possibly helping to control all the equipment in a house or workplace.

The device could be made more compact and portable, so that it is easy to carry and use.

It could be further integrated with mobile devices for improved communication during use.
